Tagged "artificial intelligence"

Becoming Live – A Swarm-Controlled Sampler

Becoming is an algorithmic composition program written in Java that builds upon some of John Cage's frequently employed compositional processes. Cage often used the idea of a "gamut" in his compositions. A gamut could be a collection of musical fragments, or a collection of sounds, or a collection of instruments. Often, he would arrange the gamut visually on a graph, then use that graph to piece together the final output of a piece. Early in his career, he often used a set of rules or equations to determine how the output would relate to the graph. Around 1949, during the composition of the piano concerto, he began using chance to decide how music would be assembled from the graph and gamut.

In Becoming, I directly borrow Cage's gamut and graph concepts; however, the software assembles music using concepts from the AI subfield of swarm intelligence. I place a number of agents on the graph and, rather than dictating their motions from a top-down rule-based approach, the music grows in a bottom-up fashion based on local decisions made by each agent. Each agent has preferences that determine its movement around the graph. These values dictate how likely the agent is to move toward food, how likely the agent is to move toward the swarm, and how likely the agent is to avoid the predator.
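The bottom-up movement described above can be sketched in a few lines of Python. This is a minimal illustration, not the Becoming source code (which is written in Java): the `Agent` class, the `move` function, the grid coordinates, and the three preference names are all assumptions made for the example.

```python
import random


class Agent:
    """Hypothetical swarm agent on a 2D grid; all names are illustrative."""

    def __init__(self, x, y, food_pull, swarm_pull, predator_fear):
        self.x, self.y = x, y
        self.food_pull = food_pull          # chance of stepping toward food
        self.swarm_pull = swarm_pull        # chance of stepping toward the swarm
        self.predator_fear = predator_fear  # chance of stepping away from the predator


def toward(a, b):
    """Return -1, 0, or 1: the direction of one grid step from a toward b."""
    return (b > a) - (b < a)


def move(agent, food, swarm_center, predator, rng=random):
    """Move one agent a single step using only its own preferences."""
    dx = dy = 0
    if rng.random() < agent.food_pull:
        dx += toward(agent.x, food[0])
        dy += toward(agent.y, food[1])
    if rng.random() < agent.swarm_pull:
        dx += toward(agent.x, swarm_center[0])
        dy += toward(agent.y, swarm_center[1])
    if rng.random() < agent.predator_fear:
        dx -= toward(agent.x, predator[0])
        dy -= toward(agent.y, predator[1])
    # Limit the combined pull to a single grid step per axis.
    agent.x += max(-1, min(1, dx))
    agent.y += max(-1, min(1, dy))


# An agent that always chases food and ignores everything else.
agent = Agent(0, 0, food_pull=1.0, swarm_pull=0.0, predator_fear=0.0)
move(agent, food=(5, 3), swarm_center=(9, 9), predator=(1, 1))
print(agent.x, agent.y)
```

No agent is told where the swarm as a whole should go. Each one makes a local, probabilistic decision, and the overall motion emerges from many such steps.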

Black Allegheny is an album of music created using swarm intelligence

Black Allegheny is a collection of pieces composed using swarm intelligence. Each piece in the album was assembled using custom software that tracks the motion of a virtual herd, and relates that information to pre-selected audio files. This software is called Becoming, and it was born from an exploration of Cage's graph and gamut techniques. These techniques were expanded into the domain of digital audio, then swarm algorithms were added.

Mailbox and A Handwritten Letter to the World Wide Web at Cyborgs Post Human Culture show in Kamloops, BC

Shortly, two of my pieces will be performed at Cyborgs Posthuman Culture Show in Kamloops, BC.

Machine Song 1 at CSUF New Music Festival

Shortly, Machine Song 1 will be performed at the CSUF New Music Festival 2013.

Creativity in Algorithmic Music

In this essay I am going to review the topic of creativity in algorithmic music [1], focusing on three perspectives on creativity offered by three groups of composers. The first section will review the definition of creativity offered by computational psychologist Margaret Boden. The second section will examine one possible measure of creativity. The next section will look at three different composers, their attitudes toward creativity and the way their algorithms embody those attitudes. Finally, I will critically examine the core questions that are being asked by algorithmic composers.

The End of My Career at SoundWalk Long Beach This Weekend

This week Erin and I will be down in Long Beach to participate in SoundWalk 2013. The End of My Career will be played at one of the locations.

Disconnected, Algorithmic Sound Collages from Web API

I'm pleased to announce the release of Disconnected, an album of algorithmic sound collages generated by pulling sounds from the web.

I prefer to call this album semi-algorithmic because some of the music is purely software-generated, while other pieces are a collaboration between the software and myself. Tracks four and six are purely algorithmic, while the other tracks are a mix of software-generated material and more traditionally composed material.

The software used in the sound collage pieces (1, 3, 4, 6) was inspired by Melissa Schilling's Small World Network Model of Cognitive Insight. Her theory essentially says that moments of cognitive insight, or creativity, occur whenever a connection is made between previously distantly related ideas. In graph theory, these types of connections are called bridges, and they have the effect of bringing entire neighborhoods of ideas closer together.

I applied Schilling's theory to sounds from freesound.org. My software searches for neighborhoods of sounds that are related by aural similarity and stores them in a graph of sounds. These sounds are then connected with more distant sounds via lexical connections from wordnik.com. These lexical connections are bridges, or moments of creativity. This process is detailed in the paper Composing with All Sound Using the FreeSound and Wordnik APIs.
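To make the bridge idea concrete, here is a small Python sketch under stated assumptions. The sound names and the links between them are invented for illustration, and nothing here calls the freesound.org or wordnik.com APIs. It only shows how one added edge connects two neighborhoods that were previously unreachable from each other.

```python
from collections import deque


def add_edge(graph, a, b):
    """Add an undirected edge between two sounds."""
    graph.setdefault(a, set()).add(b)
    graph.setdefault(b, set()).add(a)


def shortest_path_length(graph, start, goal):
    """Breadth-first search; returns the hop count, or None if unreachable."""
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == goal:
            return dist
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


graph = {}
# Two neighborhoods of aurally similar sounds (made-up example data).
for a, b in [("rain", "drizzle"), ("drizzle", "shower"),
             ("engine", "motor"), ("motor", "generator")]:
    add_edge(graph, a, b)

before = shortest_path_length(graph, "rain", "generator")

# A hypothetical lexical connection acts as the bridge.
add_edge(graph, "shower", "engine")
after = shortest_path_length(graph, "rain", "generator")

print(before, after)
```

Before the bridge is added there is no path between the two neighborhoods. Afterward, every sound in one neighborhood can reach every sound in the other.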

Finally, these sound graphs must be activated to generate sound collages. I used a modified boids algorithm to allow a swarm to move over the sound graph. Sounds were triggered whenever the population on a vertex surpassed a threshold.
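The triggering rule can be sketched as follows. This is a simplified stand-in and not the modified boids algorithm itself: agents here take a random walk between neighboring vertices, and the function names, graph, and threshold value are assumptions made for the example.

```python
import random
from collections import Counter


def step_swarm(graph, positions, rng=random):
    """Move every agent to a random neighboring vertex (a stand-in for boids rules)."""
    return [rng.choice(sorted(graph[p])) for p in positions]


def triggered(positions, threshold):
    """Return the vertices whose population exceeds the threshold."""
    counts = Counter(positions)
    return sorted(v for v, n in counts.items() if n > threshold)


# A tiny hypothetical sound graph: each vertex stands for one sound.
graph = {
    "rain": {"drizzle", "engine"},
    "drizzle": {"rain"},
    "engine": {"rain"},
}
positions = ["rain", "rain", "drizzle", "engine"]

for _ in range(4):
    positions = step_swarm(graph, positions)
    for vertex in triggered(positions, threshold=2):
        print("play", vertex)
```

In a real system the `print` call would be replaced by whatever starts audio playback for the sound stored at that vertex.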

Ad Hoc Artificial Intelligence in Algorithmic Music Composition

On October 4, I will be presenting at the 3rd Annual Workshop on Musical Metacreation. I will be presenting a paper on the ways that AI practitioners have used ad hoc methods in algorithmic music programs, and what that means for the field of computational creativity. The paper is titled Implications of Ad Hoc Artificial Intelligence in Music Composition.

This paper is an examination of several well-known applications of artificial intelligence in music generation. The algorithms in EMI, GenJam, WolframTones, and Swarm Music are examined in pursuit of ad hoc modifications. Based on these programs, it is clear that ad hoc modifications occur in most algorithmic music programs. We must keep this in mind when generalizing about computational creativity based on these programs. Ad hoc algorithms model a specific task, rather than a general creative algorithm. The musical metacreation discourse could benefit from the skepticism of the procedural content practitioners at AIIDE.

The workshop is taking place on the North Carolina State University campus in Raleigh, NC. Other presenters include Andie Sigler, Tom Stoll, Arne Eigenfeldt, and fellow NIU alumnus Tony Reimer.

Seven Solos Composed by Cellular Automata

I've collected several pieces composed using cellular automata into one package.

This collection of seven solos for any instrument was created by computer programs that simulate cellular automata. A cellular automaton is a mathematical system containing many cellular units that change over time according to a predetermined rule set. The most famous cellular automaton is Conway's Game of Life. Cellular automata such as Conway's Game of Life and the ones used to compose these pieces are capable of generating complex patterns from a very small set of rules. These solos were created by mapping elementary cellular automata to music data. One automaton was mapped to pitch data and a second automaton was mapped to rhythm data. A unique rule set was crafted to generate unique patterns for each piece.
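As an illustration of the general approach, the sketch below runs two elementary cellular automata and maps one to pitch and the other to rhythm. The mapping is an assumption made for this example (counting live cells and indexing into a scale or a list of durations), as are the rule numbers, the scale, and the starting row. The programs that produced the actual scores may work quite differently.

```python
def ca_step(cells, rule):
    """Apply one step of an elementary cellular automaton with wraparound edges."""
    n = len(cells)
    out = []
    for i in range(n):
        left, center, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (center << 1) | right  # neighborhood as a 3-bit number
        out.append((rule >> index) & 1)              # look up that bit in the rule
    return out


def run(cells, rule, steps):
    """Return the starting row followed by `steps` further generations."""
    rows = [cells]
    for _ in range(steps):
        rows.append(ca_step(rows[-1], rule))
    return rows


SCALE = [60, 62, 64, 65, 67, 69, 71, 72]  # C major as MIDI note numbers
DURATIONS = [0.25, 0.5, 1.0, 2.0]         # note lengths in beats


def row_to_pitch(row):
    """Hypothetical mapping: the number of live cells selects a scale degree."""
    return SCALE[sum(row) % len(SCALE)]


def row_to_duration(row):
    """Hypothetical mapping: the number of live cells selects a duration."""
    return DURATIONS[sum(row) % len(DURATIONS)]


seed = [0, 0, 0, 1, 0, 0, 0, 0]
pitches = [row_to_pitch(r) for r in run(seed, 90, 7)]       # rule 90 drives pitch
durations = [row_to_duration(r) for r in run(seed, 30, 7)]  # rule 30 drives rhythm
melody = list(zip(pitches, durations))
print(melody)
```

Each generation of an automaton becomes one note, so changing either rule number or the seed row produces a different solo from the same small program.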

Download the score for Seven Solos Composed by Cellular Automata for any instrument by Evan X. Merz.

Two Duets Composed by Cellular Automata

In the past few days I've completed several programs that compose rather nice notated music using cellular automata. Yesterday I posted seven solos generated by cellular automata. Today I am following up with two duets. Like the solos, these pieces were generated using elementary cellular automata.

All of these pieces look rather naked. In the past I've added tempo, dynamics, and articulations to algorithmic pieces where the computer only generated pitches and durations. Lately I feel like it's best to present the performer with exactly what was generated, and leave the rest up to the performer. So these pieces are a bit more like sketches, in the sense that the performer will fill out some of the details.

Download the score for Two Duets Composed by Cellular Automata for any instruments by Evan X. Merz.

Why I Created QuizMana.com

When I was teaching at UCSC and SJSU, I taught very large courses, often consisting of over 200 students. Courses that size create lots of infrastructural problems. Communication is a huge problem, but that's mostly taken care of by Canvas or Blackboard. The bigger problem for me was assessments.

I think that even well-written multiple choice quizzes are not great assessments. In multiple choice quizzes, students always have the answer presented to them. Even very difficult multiple choice quizzes never ask students to explain their reasoning. They never force students to come up with a concept from memory. They present students with the false premise that knowledge is fixed, and is simply a matter of choosing from what already exists.

So I always wanted to use other types of questions, and I did. Or at least I tried to. To incorporate even one short answer question meant hours of grading. I once did a single essay assignment for a large class, and probably spent over forty hours grading essays.

I needed a grading tool that could save me time, while allowing me to diversify the types of assessments I could use on a week-to-week basis. For the past three months I've been building a website that automatically grades short answer, essay, and multiple choice questions. I call it QuizMana.com, and it's accepting applicants for the closed beta right now.

The hook of the website is automatic grading, but the purpose of it is to save teachers as much time as possible. So the grader gives a score for every question, but the site then points the teacher to all the "low confidence" scores. These are the scores where the grader didn't have enough information to be fully confident it gave an accurate score. The teacher can then grade the low confidence responses on a single page, and then they are done!

So the grader is part of a larger process in which teachers only grade the work that actually needs their attention.
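The review step can be pictured with a short Python sketch. It is purely illustrative and does not reflect how QuizMana is implemented: the `GradedResponse` fields, the 0 to 1 confidence scale, and the 0.8 cutoff are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class GradedResponse:
    student: str
    score: float       # score assigned by the automatic grader
    confidence: float  # hypothetical 0.0 to 1.0 confidence in that score


def needs_review(responses, min_confidence=0.8):
    """Return only the responses the teacher should grade by hand."""
    return [r for r in responses if r.confidence < min_confidence]


responses = [
    GradedResponse("student_a", score=9.0, confidence=0.95),
    GradedResponse("student_b", score=4.0, confidence=0.40),
    GradedResponse("student_c", score=7.5, confidence=0.85),
]

for r in needs_review(responses):
    print(r.student, r.score)
```

Only the second response falls below the cutoff, so it is the only one that would be shown to the teacher for manual grading.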

I think this can be a great tool, and I think it will save teachers a lot of time. If you're teaching a large class this semester, then give yourself a new tool, and try QuizMana.

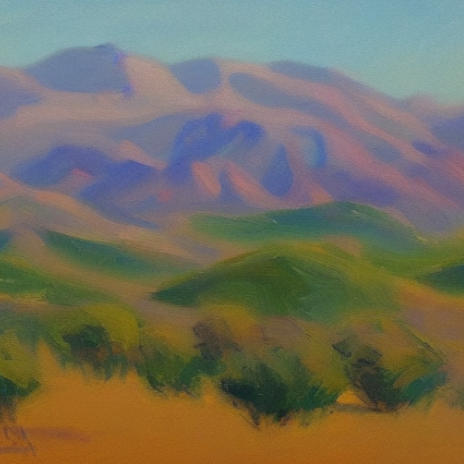

Initial Experiments with Make-a-Scene by Meta

Last week Meta announced their entry into the field of artificial intelligence image generation. Their new tool is called Make-a-Scene and it takes both an image and a line of text as prompts. It's a more collaborative tool than some of the other image generators made by other companies. A person can sketch out a rough scene as input, then use text to tell Make-a-Scene how to fill it in.

Make-a-Scene isn't yet open to the public, but as a Meta employee, I was able to get my hands on it early. In this post, I'm going to show you my first experiments with Make-a-Scene, and you can see how it compares to the other image generation tools.

As a lover of California landscapes, and a collector of the painters known as the California Impressionists, I had to start by trying to generate some California landscapes. I drew an image with a sky, a mountain, a river, and a fence. Then I gave it the prompt "an impressionist painting of california". This image shows the input along with four generated images.

I particularly liked the first two images.

As you can see, Make-a-Scene tries to follow the image input as closely as possible, and it's able to interpret the phrase "impressionist painting" in many different ways.

Next, I fed it the much more specific prompt of "Emperor Palpatine training Anakin Skywalker". As you can see from the generated images, it struggled much more to understand both my poor drawing, and the very specific text prompt.

You can see how my drawing led the AI astray in the generated images. I included lightsabers in sections of the image labeled as "person" so Make-a-Scene added some funky looking arms onto the people. Interestingly, it didn't necessarily understand who the fictional characters were, but it knew that they were soldiers.

For my last experiment, I went back to something more generic. I thought about the type of images that marketers might need. I drew a picture of a person-shaped blob holding a spoon-shaped blob, and gave it the prompt "a woman eating breakfast". The results are trippy, but interesting.

The first image it generated looks almost like usable clip art.

Overall, I think Make-a-Scene is interesting and fun. I think, even in this early state, it has some real possibility for generating art in some situations. I think it would be particularly good at creating trippy art for album covers or single artwork on music streaming sites. I also think it could be useful for brainstorming visual ideas about characters, concepts, and even fiction. I hope that Meta opens it up to the public soon.

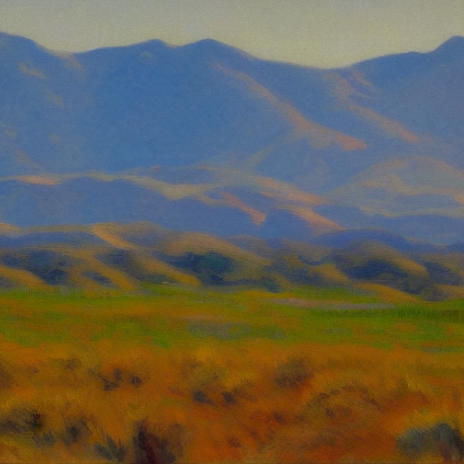

How to set up stable diffusion on Windows

In this post, I'm going to show you how you can generate images using the stable diffusion model on a Windows computer.

This tutorial is based on the stable diffusion tutorial from keras.io. I started with that tutorial because it is relatively system agnostic, and because it uses optimizations that will help on my low-powered Windows machine. We only need to make a few modifications to that tutorial to get everything to work on Windows.

Steps

- Install python. First, check if python is installed using the following command:

python --version

If you already have python, then you can skip this step. Otherwise, type 'python' again to trigger the Windows Store installation process.

- Install pip. You can install pip by downloading the installation script at https://bootstrap.pypa.io/get-pip.py. Run it using the following command.

python get-pip.py

- Enable long file path support. This can be done in multiple ways. I think the easiest is to run PowerShell as administrator, then run the following command:

New-ItemProperty -Path "HKLM:\SYSTEM\CurrentControlSet\Control\FileSystem" `
    -Name "LongPathsEnabled" -Value 1 -PropertyType DWORD -Force

- Install dependencies. I had to manually install a few dependencies. Use the following command to install all needed dependencies.

pip install keras-cv tensorflow tensorflow_datasets matplotlib

- Save the code to a file. Save the following code into a file called whatever you want. I called mine 'stable-diffusion.py'.

import time
import keras_cv
from tensorflow import keras
import matplotlib.pyplot as plt

# Build the model, then generate one 512x512 image from the text prompt.
model = keras_cv.models.StableDiffusion(img_width=512, img_height=512)
images = model.text_to_image("california impressionist landscape showing distant mountains", batch_size=1)

def plot_images(images):
    # Show the generated images side by side with the axes hidden.
    plt.figure(figsize=(20, 20))
    for i in range(len(images)):
        ax = plt.subplot(1, len(images), i + 1)
        plt.imshow(images[i])
        plt.axis("off")

plot_images(images)
plt.show()

- Run the code.

python stable-diffusion.py

- Enjoy. To change the prompt, simply alter the string that is fed into 'model.text_to_image' on line 8. Here are my first images created using this method and the given prompt.