Tagged "music"

Wii Play the Drums

Now with nunchuk support, so you can be both a drummer AND a ninja!

MapHub Virtual Instrument

A demonstration of my virtual instrument based on geo-tagged calls from maphub.org

Dad's Got Ninjas – Alex's Monster

I wrote the score for this terrific toon by Mike Kalec and Ryan Kemp.

Becoming Live – A Swarm-Controlled Sampler

Becoming is an algorithmic composition program, written in Java, that builds upon some of John Cage's frequently employed compositional processes. Cage often used the idea of a "gamut" in his compositions. A gamut could be a collection of musical fragments, a collection of sounds, or a collection of instruments. Often, he would arrange the gamut visually on a graph, then use that graph to piece together the final output of a piece. Early in his career, he often used a set of rules or equations to determine how the output would relate to the graph. Around 1949, during the composition of his piano concerto, he began using chance to decide how music would be assembled from the graph and gamut.

In Becoming, I directly borrow Cage's gamut and graph concepts; however, the software assembles music using concepts from swarm intelligence, a subfield of AI. I place a number of agents on the graph and, rather than dictating their motions with top-down rules, let the music grow from the bottom up out of local decisions made by each agent. Each agent has numeric preferences that determine its movement around the graph. These values dictate how likely the agent is to move toward food, how likely it is to move toward the swarm, and how likely it is to avoid the predator.
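The agent behavior described above can be sketched in Java, the language Becoming was written in. This is a minimal illustration under assumptions, not Becoming's actual code: the graph is treated as a 2D plane, and the class SwarmAgent, its fields, and the step method are hypothetical names. Each preference is normalized into a probability, and a uniform random number picks which of the three behaviors the agent follows on a given step.

```java
import java.util.Random;

// Hypothetical sketch of a Becoming-style agent; names and the 2D layout are assumptions.
final class SwarmAgent {
    double x, y;                                     // position on the graph
    final double foodPref, swarmPref, predatorPref;  // relative likelihood of each behavior

    SwarmAgent(double x, double y, double foodPref, double swarmPref, double predatorPref) {
        this.x = x;
        this.y = y;
        this.foodPref = foodPref;
        this.swarmPref = swarmPref;
        this.predatorPref = predatorPref;
    }

    // Moves stepSize toward (tx, ty) when sign is +1, or directly away from it when sign is -1.
    private void moveRelativeTo(double tx, double ty, double stepSize, int sign) {
        double dx = tx - x, dy = ty - y;
        double len = Math.hypot(dx, dy);
        if (len == 0) return; // already at the target: no direction to move in
        x += sign * stepSize * dx / len;
        y += sign * stepSize * dy / len;
    }

    // One local decision. r is uniform in [0, 1); the three preferences are normalized
    // into probabilities. Returns the behavior that was chosen.
    String step(double[] food, double[] swarmCenter, double[] predator, double stepSize, double r) {
        double total = foodPref + swarmPref + predatorPref;
        if (total <= 0) return "stay";
        if (r < foodPref / total) {
            moveRelativeTo(food[0], food[1], stepSize, 1);
            return "food";
        } else if (r < (foodPref + swarmPref) / total) {
            moveRelativeTo(swarmCenter[0], swarmCenter[1], stepSize, 1);
            return "swarm";
        }
        moveRelativeTo(predator[0], predator[1], stepSize, -1);
        return "flee";
    }

    // Convenience overload that draws r from a random number generator.
    String step(double[] food, double[] swarmCenter, double[] predator, double stepSize, Random rng) {
        return step(food, swarmCenter, predator, stepSize, rng.nextDouble());
    }
}
```

Because each agent only looks at its own position and three targets, any larger structure in the music has to emerge from many such local decisions.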

Black Allegheny is an album of music created using swarm intelligence

Black Allegheny is a collection of pieces composed using swarm intelligence. Each piece in the album was assembled using custom software that tracks the motion of a virtual herd and relates that information to pre-selected audio files. This software is called Becoming, and it was born from an exploration of Cage's graph and gamut techniques. These techniques were expanded into the domain of digital audio, and then swarm algorithms were added.

Cannot Connect

"Cannot Connect" is a problem for both computers and for people. When dealing with technology, we receive this message when we try to use something new. For people, this can be a problem in every sort of relationship.

The keyboard is a tool that people use every day to try to connect with other people. Through blogs, tweets, prose and poetry, we try to engage other humans through our work at the keyboard.

In this piece, the performer attempts to connect to both the computer and the audience through the keyboard. The software presents a randomized electronic instrument each time it is started. It selects from a palette of samples, synthesizers and signal processing effects. The performer must feel out the new performance environment and use it to connect to the audience by typing free association verse.

Letting it Go to Voicemail

A multimedia piece generated by pushing the same buffer to both the speakers and the screen (as a line drawing). The live generative version is slightly better than the YouTube render below.
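One way to push a single buffer to both outputs is to hand the same float[] to the audio device and to a drawing routine. The following Java sketch covers only the drawing half, under assumed conventions: BufferDrawing and toPolyline are hypothetical names, samples are assumed to lie in [-1, 1], and screen y grows downward. It converts a buffer into the vertices of a line drawing.

```java
// Hypothetical sketch: turn one audio buffer into polyline vertices for drawing.
final class BufferDrawing {
    // Maps sample i to x = i * (width - 1) / (n - 1) and amplitude to y,
    // with +1 at the top (y = 0) and -1 at the bottom (y = height - 1).
    static double[][] toPolyline(float[] buffer, int width, int height) {
        int n = buffer.length;
        double[][] points = new double[n][2];
        for (int i = 0; i < n; i++) {
            double x = n == 1 ? 0 : (double) i * (width - 1) / (n - 1);
            double clamped = Math.max(-1.0, Math.min(1.0, buffer[i])); // keep the line on screen
            double y = (1.0 - clamped) / 2.0 * (height - 1);
            points[i][0] = x;
            points[i][1] = y;
        }
        return points;
    }
}
```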

Search by Image at Currents 2012

Search by Image is being played at Currents Santa Fe this month.

The World in 3D

When I saw J. Dearden Holmes' 3D pictures from the 1920s presented as animated GIFs, I was struck by the incongruity between the images and their presentation. These photographs were shot in the 1920s and meant to be viewed on a stereoscope. Yet they were being presented online as animated GIFs, a format that didn't come into common use until the 1990s. This incongruity inspired me to write incongruous music, so I embedded multiple incongruities in it: incongruities within the music itself, as well as incongruities between the music and the images.

To the Towers and the Satellites

Here's a video of a recent performance of one of my pieces called To the Towers and the Satellites. Music composed by Evan X. Merz. Sculpture and Stage by Sudhu Tewari. Movement by Nuria Bowart and Shira Yaziv.

Buy Transient on Bandcamp

I'm so pleased to announce the release of a new album of experimental electronic music called Transient.

Transient is a set of music that I wrote in the summer I moved from Chicago to San Jose. At the time, I was experimenting with custom algorithmic audio slicing and glitching software, and you can hear this on many of the tracks.

Mailbox and A Handwritten Letter to the World Wide Web at Cyborgs Post Human Culture show in Kamloops, BC

Shortly, two of my pieces will be performed at Cyborgs Posthuman Culture Show in Kamloops, BC.

Machine Song 1 at CSUF New Music Festival

Shortly, Machine Song 1 will be performed at the CSUF New Music Festival 2013.

Creativity in Algorithmic Music

In this essay I am going to review the topic of creativity in algorithmic music [1], focusing on three perspectives on creativity offered by three groups of composers. The first section will review the definition of creativity offered by computational psychologist Margaret Boden. The second section will examine one possible measure of creativity. The third section will look at three different composers, their attitudes toward creativity and the way their algorithms embody those attitudes. Finally, I will critically examine the core questions that are being asked by algorithmic composers.

Office Problems #80 – Stealing Breast Milk

I really enjoyed working with Brandon Buczek on the score for the newest episode of Office Problems.

What Will Come from the Vacuum

This week What Will Come from the Vacuum is being played in Trauenkirchen, Germany, as part of an exhibition called Physik und Musik. This piece was written as a response to images of scientific equipment.

Sonifying Processing

Sonifying Processing shows students and artists how to bring sound into their Processing programs. It takes a hands-on approach, incorporating examples into every topic that is discussed. Each section of the book explains a sound programming concept, then demonstrates it in code. The examples build from simple synthesizers in the first few chapters to more complex sound-manglers as the book progresses. Each step of the way is examined at a level that is simple enough for new learners, yet comfortable for more experienced programmers.

Topics covered include Additive Synthesis, Frequency Modulation, Sampling, Granular Synthesis, Filters, Compression, Input/Output, MIDI, Analysis and everything else an artist may need to bring their Processing sketches to life.

"Sonifying Processing is a great introduction to sound art in Processing. It's a valuable reference for multimedia artists." - Beads Creator Oliver Bown

Sonifying Processing is available as a free pdf ebook, in a Kindle edition, or in print from Amazon.com.

Downloads

Sonifying Processing The Beads Tutorial

The Beads Library was created by Oliver Bown, and can be downloaded at http://www.beadsproject.net/.

Press

Sonifying Processing on Peter Kirn's wonderful Create Digital Music

The End of My Career at SoundWalk Long Beach This Weekend

This week Erin and I will be down in Long Beach to participate in SoundWalk 2013. The End of My Career will be played at one of the locations.

Office Problems #50 – Forgetting Something Important

I recently supplied the score for the latest episode of the Office Problems web show.

Disconnected, Algorithmic Sound Collages from Web APIs

I'm pleased to announce the release of Disconnected, an album of algorithmic sound collages generated by pulling sounds from the web.

I prefer to call this album semi-algorithmic because some of the music is purely software-generated, while other pieces are a collaboration between the software and myself. Tracks four and six are purely algorithmic, while the other tracks are a mix of software-generated material and more traditionally composed material.

The software used in the sound collage pieces (1, 3, 4, 6) was inspired by Melissa Schilling's Small World Network Model of Cognitive Insight. Her theory essentially says that moments of cognitive insight, or creativity, occur whenever a connection is made between previously distantly related ideas. In graph theory, these types of connections are called bridges, and they have the effect of bringing entire neighborhoods of ideas closer together.

I applied Schilling's theory to sounds from freesound.org. My software searches for neighborhoods of sounds that are related by aural similarity and stores them in a graph of sounds. These sounds are then connected with more distant sounds via lexical connections from wordnik.com. These lexical connections are bridges, or moments of creativity. This process is detailed in the paper Composing with All Sound Using the FreeSound and Wordnik APIs.

Finally, these sound graphs must be activated to generate sound collages. I used a modified boids algorithm to allow a swarm to move over the sound graph. Sounds were triggered whenever the population on a vertex surpassed a threshold.
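The triggering rule in the paragraph above can be sketched as follows. This is a hypothetical illustration, not the software used on Disconnected: VertexTrigger and update are invented names, each agent is assumed to sit on exactly one vertex per frame, and a sound fires only on the frame when a vertex's population first rises above the threshold, so a crowded vertex doesn't retrigger every frame (an assumption about what "surpassed" means).

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: fire a vertex's sound when its swarm population crosses a threshold.
final class VertexTrigger {
    private final int threshold;
    private int[] previousCounts; // population of each vertex on the last frame

    VertexTrigger(int vertexCount, int threshold) {
        this.threshold = threshold;
        this.previousCounts = new int[vertexCount];
    }

    // agentVertices[a] is the vertex agent a currently occupies. Returns the vertices whose
    // population rose above the threshold on this frame after being at or below it last frame.
    List<Integer> update(int[] agentVertices) {
        int[] counts = new int[previousCounts.length];
        for (int v : agentVertices) counts[v]++;
        List<Integer> triggered = new ArrayList<>();
        for (int v = 0; v < counts.length; v++) {
            if (counts[v] > threshold && previousCounts[v] <= threshold) triggered.add(v);
        }
        previousCounts = counts;
        return triggered;
    }
}
```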

Ad Hoc Artificial Intelligence in Algorithmic Music Composition

On October 4, I will be presenting a paper at the 3rd Annual Workshop on Musical Metacreation on the ways that AI practitioners have used ad hoc methods in algorithmic music programs, and what that means for the field of computational creativity. The paper is titled Implications of Ad Hoc Artificial Intelligence in Music Composition.

This paper is an examination of several well-known applications of artificial intelligence in music generation. The algorithms in EMI, GenJam, WolframTones, and Swarm Music are examined in pursuit of ad hoc modifications. Based on these programs, it is clear that ad hoc modifications occur in most algorithmic music programs. We must keep this in mind when generalizing about computational creativity based on these programs. Ad hoc algorithms model a specific task, rather than a general creative algorithm. The musical metacreation discourse could benefit from the skepticism of the procedural content practitioners at AIIDE.

The workshop is taking place on the North Carolina State University campus in Raleigh, NC. Other presenters include Andie Sigler, Tom Stoll, Arne Eigenfeldt, and fellow NIU alumnus Tony Reimer.

Swarm Intelligence in Music in The Signal Culture Cookbook

The Signal Culture Group just published The Signal Culture Cookbook. I contributed a chapter titled "The Mapping Problem", which deals with issues surrounding swarm intelligence in music and the arts. Swarm intelligence is a naturally non-human mode of intelligent behavior, so it presents some unique problems when being applied to the uniquely human behavior of creating art.

The Signal Culture Cookbook is a collection of techniques and creative practices employed by artists working in the field of media arts. Articles include real-time glitch video processing, direct laser animation on film, transforming your drawing into a fake computer, wi-fi mapping, alternative uses for piezo mics, visualizing earthquakes in real time and using swarm algorithms to compose new musical structures. There's even a great, humorous article on how to use offline technology for enhancing your online sentience – and more!

And here's a quote from the introduction to my chapter.

Some composers have explored the music that arises from mathematical functions, such as fractals. Composers such as myself have tried to use computers not just to imitate the human creative process, but also to simulate the possibility of inhuman creativity. This has involved employing models of intelligence and computation that aren't based on cognition, such as cellular automata, genetic algorithms and the topic of this article, swarm intelligence. The most difficult problem with using any of these systems in music is that they aren't inherently musical. In general, they are inherently unrelated to music. To write music using data from an arbitrary process, the composer must find a way of translating the non-musical data into musical data. The problem of mapping a process from one domain to work in an entirely unrelated domain is called the mapping problem. In this article, the problem is mapping from a virtual swarm to music; however, the problem applies in similar ways to algorithmic art in general. Some algorithms may be easily translated into one type of art or music, while other algorithms may require complex math for even basic art to emerge.
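As a small, concrete instance of the mapping problem, here is one hypothetical mapping from a swarm member's vertical position to pitch. The pentatonic scale, the root note, and the names SwarmToPitch and map are arbitrary assumptions; choosing them is exactly the kind of decision the passage describes.

```java
// Hypothetical mapping: a swarm member's vertical position becomes a MIDI pitch.
final class SwarmToPitch {
    // Major pentatonic scale degrees in semitones above the root (an arbitrary choice).
    private static final int[] PENTATONIC = {0, 2, 4, 7, 9};

    // Maps y in [0, height] to one of octaves * 5 scale steps starting at rootMidi.
    // Smaller y (higher on the screen) gives a higher pitch.
    static int map(double y, double height, int rootMidi, int octaves) {
        int steps = octaves * PENTATONIC.length;
        double normalized = 1.0 - Math.max(0.0, Math.min(1.0, y / height)); // 0 = bottom, 1 = top
        int step = (int) Math.min(steps - 1, Math.floor(normalized * steps));
        return rootMidi + 12 * (step / PENTATONIC.length) + PENTATONIC[step % PENTATONIC.length];
    }
}
```

Quantizing to a scale is what makes the otherwise arbitrary coordinate sound "musical"; a different scale or range would make the same swarm sound entirely different.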

Prelude to the Afternoon of a Faun Remix

This is my synthesizer arrangement of Debussy's Prelude to the Afternoon of a Faun.

Isao Tomita did a version with actual analog synthesizers in the 1970s.

Seven Solos Composed by Cellular Automata

I've collected several pieces composed using cellular automata into one package.

This collection of seven solos for any instrument was created by computer programs that simulate cellular automata. A cellular automaton is a mathematical system containing many cellular units that change over time according to a predetermined rule set. The most famous cellular automaton is Conway's Game of Life. Cellular automata such as Conway's Game of Life and the ones used to compose these pieces are capable of generating complex patterns from a very small set of rules. These solos were created by mapping elementary cellular automata to music data. One automaton was mapped to pitch data and a second automaton was mapped to rhythm data. A unique rule set was crafted to generate unique patterns for each piece.
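The pitch/rhythm split described above can be sketched in Java. This assumes standard elementary cellular automata with Wolfram rule numbers and wraparound edges; the mapping from each generation to a note (the number of live cells indexes a C major scale for pitch and picks a duration of 1 to 4 sixteenths for rhythm) is a hypothetical choice, not the mapping used for these solos. ElementaryCA, CaComposer, and their methods are invented names.

```java
// Elementary cellular automaton with Wolfram rule numbers (0-255) and wraparound edges.
final class ElementaryCA {
    static boolean[] next(boolean[] cells, int rule) {
        int n = cells.length;
        boolean[] out = new boolean[n];
        for (int i = 0; i < n; i++) {
            int left = cells[(i - 1 + n) % n] ? 1 : 0;
            int center = cells[i] ? 1 : 0;
            int right = cells[(i + 1) % n] ? 1 : 0;
            int index = (left << 2) | (center << 1) | right; // neighborhood as a 3-bit number
            out[i] = ((rule >> index) & 1) == 1;             // look up that bit of the rule
        }
        return out;
    }

    static int liveCount(boolean[] cells) {
        int count = 0;
        for (boolean c : cells) if (c) count++;
        return count;
    }
}

// Hypothetical mapping: one automaton drives pitch, a second drives rhythm.
final class CaComposer {
    static final int[] SCALE = {60, 62, 64, 65, 67, 69, 71, 72}; // C major, an arbitrary choice

    // Returns notes as {midiPitch, durationInSixteenths} pairs.
    static int[][] compose(boolean[] pitchSeed, int pitchRule,
                           boolean[] rhythmSeed, int rhythmRule, int length) {
        int[][] notes = new int[length][2];
        boolean[] p = pitchSeed.clone();
        boolean[] r = rhythmSeed.clone();
        for (int i = 0; i < length; i++) {
            notes[i][0] = SCALE[ElementaryCA.liveCount(p) % SCALE.length];
            notes[i][1] = 1 + ElementaryCA.liveCount(r) % 4; // 1 to 4 sixteenths
            p = ElementaryCA.next(p, pitchRule);
            r = ElementaryCA.next(r, rhythmRule);
        }
        return notes;
    }
}
```

Rule 90, for example, sets each cell to the XOR of its two neighbors, while rule 30 produces a famously irregular pattern; swapping rule numbers is the quickest way to get a different piece.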

Download the score for Seven Solos Composed by Cellular Automata for any instrument by Evan X. Merz.

Two Duets Composed by Cellular Automata

In the past few days I've completed several programs that compose rather nice notated music using cellular automata. Yesterday I posted seven solos generated by cellular automata. Today I am following up with two duets. Like the solos, these pieces were generated using elementary cellular automata.

All of these pieces look rather naked. In the past I've added tempo, dynamics, and articulations to algorithmic pieces where the computer only generated pitches and durations. Lately I feel like it's best to present the performer with exactly what was generated, and leave the rest up to the performer. So these pieces are a bit more like sketches, in the sense that the performer will fill out some of the details.

Download the score for Two Duets Composed by Cellular Automata for any instruments by Evan X. Merz.

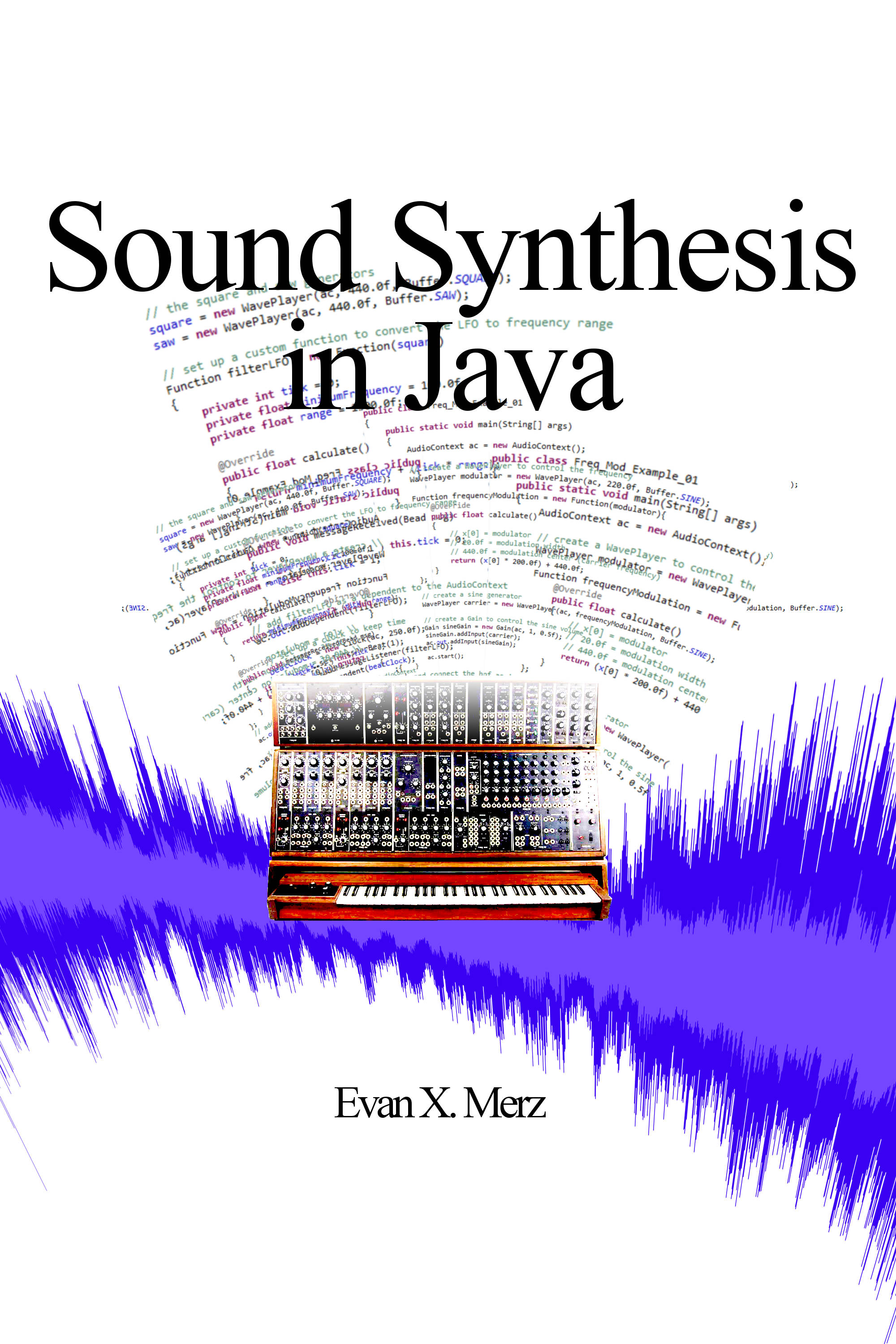

Sound Synthesis in Java

Sound Synthesis in Java introduces sound synthesis concepts using the most widely taught programming language in the world, Java. Using the Beads library, it walks readers through the basics of sound generating programs all the way up through imitations of commercial synthesizers. In eleven chapters the book covers additive synthesis, modulation synthesis, subtractive synthesis, granular synthesis, MIDI keyboard input, rendering to audio files and more. Each chapter includes an explanation of the topic and examples that are as simple as possible so even beginning programmers can follow along. Part two of the book includes six projects that show the reader how to build arpeggiators, imitate an analog synthesizer, and create flowing soundscapes using granular synthesis.

Sound Synthesis in Java is available for free online.

Read Online

Read Sound Synthesis in Java online. The source code is available from links in the text.

Make Explosive Soundscapes with Circular Sound

I've just finished work on a new musical instrument for Android devices. It's called Circular Sound, and it's aimed at people who like noise.

Circular Sound is similar to my other recent mobile instruments in that it combines a sampler with custom digital signal processing, and a unique interface. Sounds can be loaded from a configurable directory on the device, or you can play around with the default sounds, which are from freesound.org. Then they are loaded into spheres that are arranged in a circle on the left half of the screen. The left half of the screen is the source audio mixer, while the right half is used to control effects. The effects include waveshaping, granulation, delay, and modulation.

The goal of Circular Sound is to give a simple access point into generating various types of noise that are related in some way to the source sounds provided by the user.

Download it for free on Google Play and shoot me a comment to let me know if you make something cool with it!

UPDATE: I have been unable to continue maintaining Circular Sound, so it is no longer available.

Granular Synthesis for Android Phones

Granular is a granular synthesizer for Android devices. Play it by dragging your fingers around the waveform for the source audio file. You can upload your own audio files, or just play with the sounds that are distributed with the app.

The horizontal position on the waveform controls the location from which grains will be pulled. The vertical position controls the grain size. The leftmost slider controls the amount of frequency modulation applied to the grains. The middle slider controls the time interval between grains. The rightmost slider controls randomness.
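The touch mapping described above might look like this in Java. It is a sketch under assumptions, not the app's code: GranularTouch and its methods are hypothetical, touching higher on the screen is assumed to give shorter grains, the randomness slider is assumed to jitter the read position by up to 5% of the file, and each grain is shaped with a Hann window (a common grain envelope, assumed here).

```java
import java.util.Random;

// Hypothetical sketch of a granular synth's touch mapping plus a windowed grain.
final class GranularTouch {
    // Horizontal touch position (0..width) -> grain start sample in the source file.
    static int grainStart(double touchX, double width, int sourceLength, double randomness, Random rng) {
        double pos = Math.max(0, Math.min(1, touchX / width));
        // randomness (0..1) jitters the read position by up to +/- 5% of the file (assumed amount)
        pos += randomness * 0.05 * (2 * rng.nextDouble() - 1);
        pos = Math.max(0, Math.min(1, pos));
        return (int) Math.round(pos * (sourceLength - 1));
    }

    // Vertical touch position (0 = top) -> grain size in samples; higher touches give shorter grains.
    static int grainSize(double touchY, double height, double minMs, double maxMs, int sampleRate) {
        double t = Math.max(0, Math.min(1, touchY / height));
        double ms = minMs + t * (maxMs - minMs);
        return Math.max(1, (int) Math.round(ms * sampleRate / 1000.0));
    }

    // Copies `size` samples from `start` and applies a Hann window so the grain fades in and out.
    static float[] grain(float[] source, int start, int size) {
        float[] out = new float[size];
        for (int i = 0; i < size; i++) {
            int idx = start + i;
            float s = idx < source.length ? source[idx] : 0f; // pad with silence past the end
            double w = size == 1 ? 1.0 : 0.5 * (1 - Math.cos(2 * Math.PI * i / (size - 1)));
            out[i] = (float) (s * w);
        }
        return out;
    }
}
```

The window matters: without it, each grain starts and stops abruptly, and the resulting clicks dominate the texture.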

Download Granular from the Google Play Store and start making grainy soundscapes on your phone.

EDIT: I have been unable to continue maintaining this app, so it is no longer available.

How to Share an Audio File on Android from Unity/C#

Rendering audio to a file is an important feature of an audio synthesizer, but if the user can't share the file, then it's not very useful. In my second pass on my synthesizers, I'm adding the ability to share rendered audio files using email or text message.

The code for sharing audio files is tricky. You have to use Unity's AndroidJavaClass and AndroidJavaObject wrappers to call Java code that launches something called an Intent. So this code basically instantiates the Java classes for the Intent, the File, and the Uri, then starts the activity for the intent.

Figuring out the code is tough, but you also need to change a setting in your player settings. Specifically, I couldn't get this code to work without Write Access: External (SDCard) enabled in Player Settings. Even if I am writing to internal storage only, I need to tell Unity to request external write access. I'm assuming that the extra privileges are needed for sharing the file.

Here's the code.

```csharp
using UnityEngine;

// container class for the snippet (the class name is arbitrary)
public static class AndroidShare
{
    public static void ShareAndroid(string path)
    {
        // create the Android/Java Intent objects
        AndroidJavaClass intentClass = new AndroidJavaClass("android.content.Intent");
        AndroidJavaObject intentObject = new AndroidJavaObject("android.content.Intent");

        // set properties of the intent (these Java methods return the Intent itself)
        intentObject.Call<AndroidJavaObject>("setAction", intentClass.GetStatic<string>("ACTION_SEND"));
        intentObject.Call<AndroidJavaObject>("setType", "*/*");

        // log the attach path
        Debug.Log("Attempting to attach file://" + path);

        // check if the file exists
        AndroidJavaObject fileObject = new AndroidJavaObject("java.io.File", path);
        bool fileExist = fileObject.Call<bool>("exists");
        Debug.Log("File exists: " + fileExist);

        // instantiate a Uri by parsing the file's URL
        // (apps targeting API level 24 / Android 7.0 or higher get a FileUriExposedException
        // for file:// URIs shared this way; a FileProvider content:// URI is needed there)
        AndroidJavaClass uriClass = new AndroidJavaClass("android.net.Uri");
        AndroidJavaObject uriObject = uriClass.CallStatic<AndroidJavaObject>("parse", "file://" + path);

        // attach the Uri instance to the intent
        intentObject.Call<AndroidJavaObject>("putExtra", intentClass.GetStatic<string>("EXTRA_STREAM"), uriObject);

        // get the current Unity activity
        AndroidJavaClass unity = new AndroidJavaClass("com.unity3d.player.UnityPlayer");
        AndroidJavaObject currentActivity = unity.GetStatic<AndroidJavaObject>("currentActivity");

        // start the new intent - for this to work, you must have Write Access: External (SDCard) enabled in Player Settings!
        currentActivity.Call("startActivity", intentObject);
    }
}
```

Recording In-Game Audio in Unity

Recently I began doing a second pass on my synthesizers in the Google Play store. I think the core of each of those synths is pretty solid, but they are still missing some key features. For example, if you want to record a performance, you must record the output of the headphone jack.

So I just finished writing a class that renders a Unity audio stream to a wave file, and I wanted to share it here.

The class is called AudioRenderer. It's a MonoBehaviour that uses the OnAudioFilterRead method to write chunks of data to a stream. When the performance ends, the Save method is used to save to a canonical wav file.

The full AudioRenderer class is pasted here. As written, it will only work on 16-bit, 44.1 kHz audio streams, but it should be easily adaptable.

```csharp
using UnityEngine;
using System;
using System.IO;

public class AudioRenderer : MonoBehaviour
{
    #region Fields, Properties, and Inner Classes

    // constants for the wave file header
    private const int HEADER_SIZE = 44;
    private const short BITS_PER_SAMPLE = 16;
    private const int SAMPLE_RATE = 44100;

    // the number of audio channels in the output file
    private int channels = 2;

    // the audio stream instance
    private MemoryStream outputStream;
    private BinaryWriter outputWriter;

    // OnAudioFilterRead runs on the audio thread, so guard the stream with a lock
    private readonly object streamLock = new object();

    // should this object be rendering to the output stream?
    public bool Rendering = false;

    /// The status of a render
    public enum Status { UNKNOWN, SUCCESS, FAIL, ASYNC }

    /// The result of a render.
    public class Result
    {
        public Status State;
        public string Message;

        public Result(Status newState = Status.UNKNOWN, string newMessage = "")
        {
            this.State = newState;
            this.Message = newMessage;
        }
    }

    #endregion

    // Unity creates MonoBehaviours itself, so initialize in Awake rather than in a constructor
    void Awake()
    {
        this.Clear();
    }

    // reset the renderer
    public void Clear()
    {
        lock (streamLock)
        {
            this.outputStream = new MemoryStream();
            this.outputWriter = new BinaryWriter(outputStream);
        }
    }

    /// Write a chunk of data to the output stream.
    public void Write(float[] audioData)
    {
        lock (streamLock)
        {
            // convert float samples to 16-bit shorts, clamping so loud samples don't overflow
            for (int i = 0; i < audioData.Length; i++)
            {
                float sample = Mathf.Clamp(audioData[i], -1f, 1f);
                this.outputWriter.Write((short)(sample * Int16.MaxValue));
            }
        }
    }

    // write the incoming audio to the output stream
    void OnAudioFilterRead(float[] data, int channels)
    {
        if (this.Rendering)
        {
            // store the number of channels we are rendering
            this.channels = channels;
            // store the data stream
            this.Write(data);
        }
    }

    #region File I/O

    /// Stop rendering before saving, and call Clear() before recording again.
    public AudioRenderer.Result Save(string filename)
    {
        Result result = new AudioRenderer.Result();
        lock (streamLock)
        {
            if (outputStream.Length > 0)
            {
                // add a header so the data can be read as a wave file
                this.AddHeader();

                // if a filename was passed in
                if (filename.Length > 0)
                {
                    // Save to a file. Print a warning if overwriting a file.
                    if (File.Exists(filename))
                        Debug.LogWarning("Overwriting " + filename + "...");

                    // File.Create truncates an existing file; File.OpenWrite would leave
                    // stale bytes at the end if the old file was longer
                    using (FileStream fs = File.Create(filename))
                    {
                        this.outputStream.WriteTo(fs);
                    }

                    // for debugging only
                    Debug.Log("Finished saving to " + filename + ".");
                }
                result.State = Status.SUCCESS;
            }
            else
            {
                Debug.LogWarning("There is no audio data to save!");
                result.State = Status.FAIL;
                result.Message = "There is no audio data to save!";
            }
        }
        return result;
    }

    /// This generates a simple header for a canonical wave file,
    /// which is the simplest practical audio file format. It
    /// writes the header and the audio data to a new stream, then
    /// moves the reference to that stream.
    ///
    /// See this page for details on canonical wave files:
    /// http://www.lightlink.com/tjweber/StripWav/Canon.html
    private void AddHeader()
    {
        this.outputWriter.Flush();

        // samples are interleaved, so the stream length already counts every channel
        int dataBytes = (int)outputStream.Length;

        // create a new MemoryStream that will have both the audio data AND the header
        MemoryStream newOutputStream = new MemoryStream(HEADER_SIZE + dataBytes);
        BinaryWriter writer = new BinaryWriter(newOutputStream);

        writer.Write(0x46464952); // "RIFF" in ASCII
        // write the number of bytes in the entire file, minus the 8-byte RIFF preamble
        writer.Write(HEADER_SIZE + dataBytes - 8);
        writer.Write(0x45564157); // "WAVE" in ASCII
        writer.Write(0x20746d66); // "fmt " in ASCII
        writer.Write(16);         // size of the fmt chunk for PCM
        // write the format tag. 1 = PCM
        writer.Write((short)1);
        // write the number of channels
        writer.Write((short)channels);
        // write the sample rate: the number of sample frames per second
        writer.Write(SAMPLE_RATE);
        // byte rate: bytes of audio per second
        writer.Write(SAMPLE_RATE * channels * (BITS_PER_SAMPLE / 8));
        // block align: bytes per sample frame
        writer.Write((short)(channels * (BITS_PER_SAMPLE / 8)));
        // 16 bits per sample
        writer.Write(BITS_PER_SAMPLE);
        // "data" in ASCII. Start the data chunk.
        writer.Write(0x61746164);
        // write the number of bytes in the data portion
        writer.Write(dataBytes);
        writer.Flush();

        // copy over the actual audio data
        this.outputStream.WriteTo(newOutputStream);

        // move the reference to the new stream
        this.outputStream = newOutputStream;
    }

    #endregion
}
```
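For reference, the same 44-byte canonical header can be built in Java with a little-endian ByteBuffer, which makes the size arithmetic easy to check. This is an illustrative sketch (WavHeader and build are hypothetical names). Note that dataBytes is the number of PCM bytes written: because the samples are interleaved, that count already covers every channel, and the RIFF size field is dataBytes + 36.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

// Sketch of the 44-byte canonical WAV header for PCM audio.
final class WavHeader {
    static byte[] build(int dataBytes, int channels, int sampleRate, int bitsPerSample) {
        int blockAlign = channels * bitsPerSample / 8;   // bytes per sample frame
        ByteBuffer b = ByteBuffer.allocate(44).order(ByteOrder.LITTLE_ENDIAN);
        b.put("RIFF".getBytes(StandardCharsets.US_ASCII));
        b.putInt(36 + dataBytes);                         // file size minus the 8-byte RIFF preamble
        b.put("WAVE".getBytes(StandardCharsets.US_ASCII));
        b.put("fmt ".getBytes(StandardCharsets.US_ASCII));
        b.putInt(16);                                     // size of the fmt chunk for PCM
        b.putShort((short) 1);                            // format tag 1 = PCM
        b.putShort((short) channels);
        b.putInt(sampleRate);
        b.putInt(sampleRate * blockAlign);                // byte rate
        b.putShort((short) blockAlign);
        b.putShort((short) bitsPerSample);
        b.put("data".getBytes(StandardCharsets.US_ASCII));
        b.putInt(dataBytes);                              // already includes every channel
        return b.array();
    }
}
```

For one second of 16-bit stereo at 44,100 Hz, dataBytes is 44,100 × 2 channels × 2 bytes = 176,400, so the RIFF size field is 176,436.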

I love Pandora, but where is the discovery?

I have been a loyal Pandora subscriber since the month they started offering subscriptions. I love the service. I will continue subscribing forever, even if it's only to keep my perfectly tuned Christmas music station.

But Pandora is not serving its audience very well, and that annoys me.

I probably listen to Pandora over five hours a day on each work day, and probably an hour or two on days off. When I tell someone I use Pandora, they inevitably ask me, "why don't you just use Spotify?" More and more, I feel like they have a point.

In the past, I have preferred Pandora because it enabled discovery. It allowed me to create stations that would play music that I liked, but I had never heard. As a person who has spent decades of his life listening to and studying music, one of the main things I like about a piece of music is that I've never heard it before. In the past two years or so, I feel like this aspect of Pandora has dwindled or disappeared.

More and more, I feel like my Pandora stations primarily play the tracks that I have already voted for. Admittedly, some of my stations have been around for over a decade, so I have voted for a lot of tracks. When I vote for a track, however, it isn't an indication that I want to hear that track every time I turn on that station. A vote is an indication that I want to hear tracks that are similar to that track.

But this is just too rare lately on Pandora. I hear the same Ellie Goulding tracks that I voted for last year. I hear the same Glitch Mob tracks that I've heard for the past six years. I still like that music, but I would prefer to hear something else. Why not play another track off the album that I voted for? Why play the same single track over and over?

"But why not click the 'Add Variety' button?" The 'Add Variety' button adds a new seed to that station. I don't want to change the type of music played by the station; I simply want it to play OTHER music that falls within my already-indicated preferences.

What really irritates me is that this doesn't seem like a hard feature to implement. Why can't a user tune the amount of new music they hear? Why can't we have a slider that we can control with our mood? If the slider is set to 1.0, then we are in full discovery mode: every track played will be one that we haven't voted on. If the slider is set to 0.0, then every track played will be one that we HAVE voted on. In this way, Pandora could act like Spotify for users who like Spotify, and for people like me, it could act as the best shuffle on the planet.
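The slider itself could be as small as this hypothetical Java sketch. It assumes the station can already split its candidate tracks into voted and unvoted pools; DiscoverySlider and pick are invented names, and this says nothing about how Pandora actually selects tracks.

```java
import java.util.List;
import java.util.Random;

// Hypothetical discovery slider for a station's track picker.
final class DiscoverySlider {
    // slider = 1.0 always plays a track the listener has not voted on;
    // slider = 0.0 always plays one they have voted on; values in between mix the two.
    // If the chosen pool is empty, fall back to the other one; return null if both are empty.
    static String pick(double slider, List<String> voted, List<String> unvoted, Random rng) {
        boolean discover = rng.nextDouble() < slider; // nextDouble() is in [0, 1)
        List<String> pool = discover ? unvoted : voted;
        if (pool.isEmpty()) pool = discover ? voted : unvoted;
        if (pool.isEmpty()) return null;
        return pool.get(rng.nextInt(pool.size()));
    }
}
```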

As a programmer who has worked with large datasets and search tools like ElasticSearch, and who has written lots of web applications, I know that this isn't a difficult change. It might require one schema change and fewer than ten lines of new code, so it should be implementable in under a week. Design and testing might take longer.

And seriously, Pandora, I will implement this for you if you are that desperate. My current employer will loan me out, and even without knowing your code base, I could get this done in a month.

So come on, Pandora. Serve your audience. Stop making me explain why I prefer Pandora over Spotify. Add a discovery slider. Today.

I paid my dues to see David Gray live

One of the reasons I am fascinated with both computer science and music is that each is a bit like magic. Each has invisible power to make change.

Yesterday, my daughter woke up with the flu. Actually, we found out today that she has croup, which is apparently going around her school. So Erin stayed home with her, while I went to work. But we also had to cancel our plans for the evening. Instead of going to the David Gray concert together, I would go alone.

At work, I was stuck in a meeting that seemed like it would never end. During this meeting, I got a headache that kept getting worse and worse. When I rubbed my head, I could feel my temperature rising. I could tell that I was getting sick too. The meeting dragged on for four hours, but I pushed through it.

By the end of the day, I was exhausted and feverish. I had driven to work, because I was still going to make it to the concert, even if I was going alone. But in Palo Alto, you have to do a dance with the parking authority if you want to park for free. You have to move your car every two hours, from one colored zone to another. I left work a little early because I knew there would be traffic on the drive, but when I found my car, there was a bright orange envelope on the windshield. I owe Palo Alto $53.

At that point I had paid $70 for the tickets, plus $53 for the parking ticket, so I had invested $123 to see David Gray. The parking ticket only steeled my resolve. I was going to see him come hell or high water.

And this is all sort of silly, because I don't even like David Gray that much. Mostly, I have a deep sense of nostalgia for his one hit album that came out right before I went to college. I listened to it a lot in college. At the time, he was the only person I knew of who was doing singer-songwriter-plus-drum-machine really well. When I found out that Erin couldn't come to the concert, I tried to explain this to my younger coworkers who I invited to the concert. They were nonplussed, to say the least. A singer-songwriter with a drum machine really doesn't sound very compelling today. It sounds practically commonplace. But nobody had quite figured out the formula back in 1998. So David Gray felt really fresh to me at the time.

My point is, I'm not a David Gray fanboy. I just respect the amount of time I spent listening to him when I was younger. Unfortunately, this is not enough to convince others to drive all the way up to Oakland for a concert.

The drive was hellish. If you have ever commuted from San Jose to/from Oakland during rush hour, then you know how this goes. The Greek Theater is only 40 miles from my workplace. The best route that Google could calculate took two and a half hours. I was in traffic for every minute of that drive, with a rising fever. It was extremely painful, and even though I left work fifteen minutes early, I still arrived 10 minutes late.

But when I pulled up to the parking garage, things seemed to turn around. By this point I had a very high fever, the sun had gone down, and it was raining. So I couldn't see the "Full" sign on the parking garage until I had already pulled in using the wrong lane. Everyone was continuing on to the next lot. At first I tried to back out of the garage, but then I realized that it wasn't really full. So I pulled into a spot. I'd take my chances.

Then I stepped out into the rain, and started running to the theater. I could hear the music pouring over the hills. I saw a man standing in the rain, asking for extra tickets. I knew he was just going to scalp them, so I almost walked by, but fuck it, who cares. I gave him my extra ticket.

Then I ran up the steps, and breezed through security. I climbed to the top of the hill, and the music hit me.

That's the moment when you feel the true power of music. I was all alone and feverish, in the rain after a long day of work and an awful drive to the theater, yet the music seemed to heal me. I could feel myself recovering as the sound washed over me.

I didn't really talk to anyone. I listened to the music, and watched from the top of the grass. David Gray has a good band, and he has a good audience rapport. Even though his music isn't as fresh today as it was in 1998, it still changed me last night.

I bought a shirt, and felt a lot better on the drive home.

Book Review: Life of a Song

I recently had the chance to read Life of a Song: The fascinating stories behind 50 of the world's best-loved songs. It's a concise collection of fifty Life of a Song articles from the Financial Times. As I rarely have a reason to visit the FT website, and I only occasionally catch the Life of a Song podcast, the book was a great opportunity to catch up on what I'd missed. Regular readers of the column may find nothing new in the book, but for pop fans and die-hard listeners, the short collection is definitely worth a read.

The book consists of fifty articles from the regular Life of a Song column collected into book form. Each article takes on a different, well-loved tune from twentieth-century popular music. Songs covered include ‘My Way’, ‘Midnight Train to Georgia’, ‘1999’, ‘La Vie en Rose’, and ‘This Land is Your Land’. There are only a few songs in the list that I didn't know off the top of my head, including ‘Song to the Siren’ and ‘Rocket 88’. The articles usually include some remarks about the songwriter, often quoting them about their creation. Then they cover the journey from composition to hit recording, and usually mention other interpretations that followed the hit.

Each article appears to be fewer than 1,000 words. As you might expect, that's a lot to cover in so little room. So each article is fairly narrow, relating a single anecdote about the song and only touching on the rest. For instance, in the article about ‘Like a Rolling Stone’, the author relates the recording process that shaped the final sound.

On take four of the remake, serendipity strikes. Session guitarist Al Kooper, 21, a friend of the band, walks in holding his guitar, hoping to join in. He is deemed surplus to requirements, but Dylan decides he wants an organ in addition to piano, and Kooper volunteers to fill in. He improvises his part, as he would later recall, ‘like a little kid fumbling in the dark for a light switch’. And suddenly the song turns into the tumbling, cascading version that will become the finished article.

There are two pieces of information that you need to know about this book in order to enjoy it.

- It is a collection of short articles by many contributors.

- Those writers are almost entirely arts journalists, rather than trained musicians.

This book was written by a lot of authors. I counted fourteen contributors, each of whom appears to be an English journalist. This can lead to the book feeling somewhat disjointed. Each author is comfortable talking about their own domain of the music industry. Some interpret the lyrics, others relate interviews with creators, others pick up on business maneuvers behind the scenes.

In the introduction, David Cheal and Jan Dalley write that the book "is not about singers, or stars, or chart success – although of course they come into the story. It is about the music itself". If you are a musician, this may leave you expecting musical analysis, lyrical breakdowns, or at least comparisons to similar songs. The book "is about music" inasmuch as it tells stories about musicians, but it is strictly an outsider's perspective. There's no illusion that the writers were part of the culture of the song, or involved themselves with the people in the story. A reader shouldn't expect that in a collection such as this.

My favorite article is the one about ‘Midnight Train to Georgia’. That song has so much soul that it surprised me to learn that the original title, given to the tune by its white songwriter, was ‘Midnight Plane to Houston’.

The soul singer Cissy Houston… decided to record its first cover version… But the title irked. It wasn't the collision of Houstons – singer and subject – that bothered her, but one of authenticity. If she was going to sing this song, she had to feel it. And, she later said, ‘My people are originally from Georgia and they didn't take planes to Houston or anywhere else. They took trains.’

Ultimately, Life of a Song is a great book to read on the way to and from work, or to sit in your book bin next to your favorite chair. It's a book that can be read in lots of small chunks, and each chunk reveals a little bit more about a song than the recording.

Now if you don't mind, I need to catch a plane to Houston.

SoundCloud, I love you, but you're terrible

I finally started using SoundCloud for a new electro project called Fynix. I casually used it in the past under my own name, in order to share WIP tracks, or just odd stuff that didn't fit on Bandcamp. But I never used it seriously until recently. Now I am using it every day, and trying to connect with other artists. I am remixing one track a week, listening to everything on The Upload, and liking/commenting as much as I can.

SoundCloud is the best social network for musicians right now. But it still has a terrible identity crisis. Most of the services seem to be aimed at listeners, or aimed at nobody in particular.

So in this post, I'm going to vent about SoundCloud. It's a good platform, but with a few changes it could be great.

1. I am an artist. Stop treating me like a listener.

Is it really that difficult for you to recognize that I am a musician, and not a listener? I've uploaded 15 tracks. It seems like a pretty simple conditional check to me. So why is my home feed cluttered up with reposts? Why can't I easily find the new tracks by my friends?

This is the core underlying problem with SoundCloud. It has two distinct types of users, and yet it treats all users the same.

2. Your "Who to Follow" recommendations suck. They REALLY suck.

I've basically stopped checking "Who to Follow" even though I want to connect with as many musicians as possible. The recommendations seem arbitrary and just plain stupid.

The main problem is that, as a musician, I want to follow other musicians. I want to follow people who will interact with me, and who will promote my work as much as I promote theirs. Yet, the "Who to Follow" list is full of seemingly random people.

Is this person from the same city as me? No. Do they follow lots of people / will they follow back? No. Are they working in a genre similar to mine? No. Do they like and comment on lots of tracks? No.

So why the heck would I want to follow them?

3. Where are my friends' latest tracks?

This last one is just infuriating. When I log in, I want to see the latest tracks posted by my friends. So I go to my home screen, and it is pure luck if I can find something posted by someone I actually talk to on SoundCloud. It's all reposts. Even if I unfollow all the huge repost accounts, I am stuck looking at reposts by my friends, rather than their new tracks.

Okay, so let's click the dropdown and go to the list of users I am "following". Are they sorted by recent activity? No. They are sorted by the order in which I followed them. To find out if they have new tracks, I must click on them individually and check their profiles. Because that is really practical.

Okay, so maybe there's a playlist of my friends' tracks on the Discover page? Nope. It's all a random collection of garbage.

As far as I can tell, there is no way for me to listen to my friends' recent tracks. This discourages real interactions.

Ultimately, the problem is data, and intelligence. SoundCloud has none. You could blame design for these problems. The website shows a lack of direction, as if committees are leading the product in lots of different directions. SoundCloud seems to want to focus on listeners, to compete in the same space as Spotify.

But even if that's the case, it should be trivial to see that I don't use the website like a regular listener. I use it like a musician. I want to connect and interact with other musicians.

And this is such a trivial data/analytics problem that I can only think that they aren't led by data at all. Maybe this is just what I see because I lead our data team, but it seems apparent to me that data is either not used, or used poorly in all these features.

For instance, shouldn't the "Who to Follow" list be based on who I have followed in the past? I've followed lots of people who make jazz/electro music, yet no jazz/electro artists are in my "Who to Follow" list. I follow people who like and comment on my tracks, yet I am told to follow people who follow 12 people and have never posted a comment.

The most disappointing thing is that none of this is hard.
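To make "not hard" concrete, here is a rough Java sketch that scores candidate accounts on the signals listed above: same city, a habit of following lots of people, overlapping genres, and commenting activity. The `Account` fields, the weights, and the caps are all assumptions made up for illustration; they are not SoundCloud's data model or API.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical "Who to Follow" scorer: fields and weights are illustrative assumptions.
class FollowScore {
    static final class Account {
        final String name;
        final String city;
        final Set<String> genres;
        final int followingCount; // how many accounts they follow
        final int commentCount;   // how many comments they have posted

        Account(String name, String city, Set<String> genres, int followingCount, int commentCount) {
            this.name = name;
            this.city = city;
            this.genres = genres;
            this.followingCount = followingCount;
            this.commentCount = commentCount;
        }
    }

    // Higher score = better candidate to recommend.
    static double score(Account me, Account candidate) {
        double s = 0.0;
        if (me.city.equals(candidate.city)) {
            s += 1.0; // same local scene
        }
        Set<String> shared = new HashSet<>(me.genres);
        shared.retainAll(candidate.genres);
        s += 2.0 * shared.size();                             // genre overlap weighted most
        s += Math.min(candidate.followingCount, 500) / 500.0; // likely to follow back
        s += Math.min(candidate.commentCount, 100) / 100.0;   // likely to interact
        return s;
    }

    // Returns the top-k candidates, best first.
    static List<Account> recommend(Account me, List<Account> candidates, int k) {
        List<Account> ranked = new ArrayList<>(candidates);
        ranked.sort(Comparator.comparingDouble((Account a) -> score(me, a)).reversed());
        return ranked.subList(0, Math.min(k, ranked.size()));
    }
}
```

A real system would learn the weights from who people actually follow back, but even a hand-tuned score like this would rank an active jazz/electro artist above an account that follows 12 people and never comments.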

4. Oh yeah, and your browser detection sucks.

When I am browsing your site on my tablet, I do not want to use the app. I do not want your very limited mobile site. I just want the regular site (and yes, I know I can get it with a few extra clicks, but it should be the default).

Getting Lost in Pop

I've spent so much of my life making legitimately weird music. See my algorithmic music on Bandcamp for that. So it's only natural that at some point I would go completely in the opposite direction, and that time is now.

Lately I have just been loving pop music. I love listening to it. I love making it. It's just fun. When making music in a pop style it's really easy to find collaborators. After all, most musicians are trying to make "pop" in one way or another. And the collaborations usually run along predefined lines. e.g. "I'll make the music, and you do the vocals."

So that's what I've been doing lately. Tons of collaborations on tons of fun pop songs. Here's my latest on Spotify with Drizztopher Walken.

And these collaborations push me to explore more of my own ideas too. My daughters started listening to Galantis recently, a group that I fell in love with a few years back. And those two things have pushed me to make fun, danceable pop, and to even try to include my daughters (who are aged 2 and 4). Here's my latest on SoundCloud with special appearances by both of my daughters.

So what does this say about me? Am I going to make pop for the rest of my life? Do I have bad taste in music? I don't know. For now, I know that I'm having a lot of fun making pop.

Remember Nina Simone

I recently read But What If We're Wrong?: Thinking About the Present As If It Were the Past by Chuck Klosterman. The premise of the book is to predict the future by looking at how past predictions were wrong, and how they could have been right. As usual Chuck Klosterman takes on sports and culture, with some random asides about The Real World.

The most compelling section was where he tried to predict the future of Rock. He looked back at music of the past, pointing out that most people remember one person from each era of music. So most people know Bach from the baroque, Mozart from the classical, and Beethoven from the romantic era. He made the interesting point that surviving history is about being recognized by people who know nothing about the subject, rather than by people who are specialists. Specialists can name several playwrights from the 1500s, for example, but most of us can only name Shakespeare.

In the domain of rock, he said The Beatles are the logical choice to represent rock music. Still, he pointed out that it's easier to remember a single individual whose story relates to the art itself. So Bob Dylan or Elvis Presley might emerge rather than The Beatles. Then he pointed to arguments for various rock musicians and why they might turn out to be true.

It was all very interesting, but it just got me thinking about jazz. In one hundred years, who might emerge to represent all of jazz?

The obvious choice is Duke Ellington. His career spanned most of jazz, even though he retained his own distinct style throughout. He was influential as both a composer and a band leader.

Another choice might be Louis Armstrong. He invented the jazz solo as we know it today, and he performed a few of the most popular jazz tracks ever recorded.

After them, the waters get murkier. Benny Goodman made jazz popular music. Miles Davis sold more records than even Louis Armstrong. Marian McPartland brought jazz into the home long after most people had given up on it. Heck, maybe Johnny Costa will be remembered for his role as the music director of Mister Rogers' Neighborhood.

I think that it will probably be a composer. Prior to the late 19th century, the only recorded history of music was written down on paper. This is why we remember Mozart the composer, rather than the people who performed his music. We still tend to think people who write music are more important than people who perform it, even when we have recordings of great performers. That's a big reason why it will probably be Duke Ellington who is ultimately remembered.

But this strikes me as wrong. Jazz is the first music that is truly a recorded music throughout its entire history. If humanity is around in one hundred years, then jazz recordings will still exist. So couldn't it, perhaps, be the greatest performer who survives?

Also, the focus of jazz is on the improviser. Jazz is a kind of folk music, where the performer subordinates the composition to his own interpretation. So perhaps the greatest interpreter of jazz will be remembered.

In either case, it has to be Nina Simone. No other performer expressed the full range of human emotions in a single performance. Even Louis Armstrong tended toward jubilation in his performances. He never reached the depths that Nina Simone explored in I Loves You Porgy or Willow Weep for Me.

There are two arguments against Nina Simone. First, that she was closer to a pop singer than a jazz singer. Second, that she isn't known for her compositions.

Nina Simone wasn't a pop singer. That's what we would call her today because her category no longer exists. She was a cabaret singer. When she was unfairly rejected for a scholarship to study classical piano at The Curtis Institute in Philadelphia, she supported herself by playing popular music in bars and restaurants. She would sing and play whatever songs were on the radio. It was a very demanding job, and playing those songs night after night is what molded her into the greatest interpreter both as a vocalist and a pianist.

To the second objection, that she isn't remembered for her compositions, I can only point to her unforgettable performance of Mississippi Goddam, a composition of her own. With a cheerful piano accompaniment, and a melody that pushes the piece forward, she managed to write and perform a song that defines courage. The music is a beautiful, confident show tune that carries lyrics about one of the worst tragedies in American history. The effect is a better expression of the black experience in this country than any other performance in jazz.

So please, remember Nina Simone.

Here's my playlist of Nina Simone's best tracks to help her cause.

Encores 2 by Nils Frahm, and the Joy of Live Music

It's difficult to unpack my feelings about Nils Frahm as an artist. I remember, a few years back, hearing rave reviews about one of his albums. I can't recall which album it was. I listened to it, and it reminded me of the type of "scholarly" music that is being produced at every college music department with an electronic music program in the country. It didn't strike me as anything special.

Then I listened to The Noise Pop Podcast, or maybe it was Switched on Pop, and one of the hosts was overwhelmed by Frahm's 2018 album All Melody. So I listened to that, and I felt about the same.

Maybe it was a sign that I was cynical, jaded, or just plain old. Or maybe Frahm and I went through similar music educations. Maybe he was the most successful version of all the dudes making quiet, semi-ambient electronic music at all the schools I attended. Although, glancing through his Wikipedia page, I don't see any references to universities. So maybe that isn't the case either.

But as of last year, my general feeling toward Frahm was a qualified "meh."

Still, when I saw he was playing at a club that was walking distance from my house, I had to buy tickets. I invited my friend Brian along, and we caught him at The Ritz in San Jose.

It was at that concert that my feelings toward Frahm changed.

The Ritz is a fantastically intimate venue. It's compact enough that you can reach out and touch the performer from the front of the audience, and small enough to hold only about two hundred people standing.

Frahm had an absolutely massive setup. He had several antique organs, numerous synthesizers, and enormous old-fashioned amps the size of small cars.

But he had no band and there was no opening act. He came out alone. He performed alone, and the show was about him and his music. It was pure, and almost sacred in its tone at first. But as it went on he got more and more loose.

Frahm has a charming on-stage personality. Between each track he talked about his music and his writing process. He opened up his soul a little bit, and opened up about his music a lot. He got more talkative as the show progressed, until by the end he was talking about chord progressions and audience expectations. For people with a little bit of musical training, which I suspect was most of the audience, it was a magical night where we jointly worshipped at the altar of music.

On January 25th, 2019, Frahm released a new EP titled Encores 2. Does it feel like his show? Would his magical show change the way I perceived his music?

No. The album offers more of the slow, quiet sonic meditations that owe a great debt to Brian Eno. Still, it's an enjoyable album to listen to while at work, or on the train, or perhaps while making dinner.

But if you get the chance, you should definitely catch him live.

Jazz from California

Jazz isn't necessarily the first thing you think of when you think of California. You might think of surfing, Dick Dale, the Golden Gate Bridge, or Hollywood. But California has a rich history in jazz.

Don't forget that California made Benny Goodman a star, and made swing music into popular music. After the war, dozens of amazing soloists were made in California, including Dexter Gordon, Lester Young, Art Pepper, and Eric Dolphy. Household names like Dave Brubeck, Stan Getz, and Cal Tjader all started their careers in California.

I put together this playlist to celebrate the great jazzers from CA. Listen to the end to catch some more modern artists who stand tall with the rest of these greats.

Drowning in 2020

What a year.

I remember the year we had our second child, Leta. That was a difficult year. Having a two year old and a newborn in the house was not easy. Ultimately, I failed that test in many ways. I don't think I failed as a father, but I did a lot of damage to myself in order to get through the year.

I changed jobs shortly after Leta was born, but I was never able to succeed at that job. The anxiety from the birth and changing jobs just took over my life. I developed ulcers and other health issues that took me years to beat.

This year was harder. I know I'm not alone when I say that I felt like I was drowning. With the virus ravaging the country and a president who insisted on calling it a hoax or downplaying its effects, it felt like the world was closing in on me.

And that's what produced this album. I wrote it in the first few months of lockdown, when there was much false hope, but no real hope for an end to the unfolding tragedy.

This music differs from most of my other music in many ways. For one, there's only one collaborator on it, unlike my other recent work which has featured many other collaborators. Also, it's much darker. It's a bleak, synthesized hellscape that chokes off the light. It's violent and dark and lonely.

Just like 2020.

I'm proud to say that I didn't fail this year. As the world was melting down around me, I didn't drown. I swam. I was promoted at my job. I took a leadership position in my community. I volunteered at the local library. I made things. And I didn't come out with any new health problems.

But still, the year mostly felt like I was drowning.

Thanks for listening!

Glitching Images in Processing

This summer I'm going to release a new album of solo electronic music that is heavily influenced by EDM and classic rock. For the past few weeks I've been trying to figure out what to do about the art on the new album.

The album is called "FYNIX Fights Back" so I decided to use images of animals fighting, but I didn't just want to pull up generic images. I wanted to add my own special touch to each image. So I pulled out a tool that I haven't used in years: the Processing programming language.

Processing is great for simple algorithmic art. In this case I wanted to glitch some images interactively, but I wasn't exactly sure what I wanted to do.

So I just started experimenting. I played with colors and shapes and randomness. I like to derive randomness from mouse movement. The more the mouse moves, the more randomness is imparted to whatever the user is doing.

I added image glitching based on mouse speed. The quicker the cursor moves, the more random the generated shapes are, and the further they are offset from their source position in the original image.
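The following plain-Java sketch isolates that idea outside of Processing. It is a simplified stand-in, not the sketch from this post: it measures how far the cursor travelled since the previous frame and turns that distance into a random offset whose range grows with speed. The class name, the helpers, and the `gain` parameter are hypothetical.

```java
import java.util.Random;

// Hypothetical helpers: faster mouse movement -> larger random offset.
class MouseJitter {
    // Distance the cursor travelled between two frames, in pixels.
    static double speed(int x, int y, int prevX, int prevY) {
        return Math.hypot(x - prevX, y - prevY);
    }

    // Random offset in [-maxOffset, maxOffset], where maxOffset = round(speed * gain).
    static int randomOffset(double speed, double gain, Random rng) {
        int maxOffset = (int) Math.round(speed * gain);
        if (maxOffset <= 0) {
            return 0; // a still mouse adds no randomness
        }
        return rng.nextInt(2 * maxOffset + 1) - maxOffset;
    }

    public static void main(String[] args) {
        Random rng = new Random(42);
        double slow = speed(100, 100, 98, 99);   // about 2.2 px per frame
        double fast = speed(300, 200, 100, 100); // about 223.6 px per frame
        System.out.println("slow offset: " + randomOffset(slow, 0.5, rng));
        System.out.println("fast offset: " + randomOffset(fast, 0.5, rng));
    }
}
```

In Processing, the two positions would come from `mouseX`/`mouseY` and `pmouseX`/`pmouseY`.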

Here's the end result.

Here's the source code. Make it even better.

import java.awt.event.KeyEvent;

// relationship between image size and canvas size
float scalingFactor = 2.0;

// image from unsplash.com
String imageFilename = "YOUR_FILE_IN_DATA_FOLDER.jpg";

// image containers
PImage img;
PImage scaledImage;

int minimumVelocity = 40;
int velocityFactorDivisor = 5; // the larger this is, the more vertices you will get
int velocityFactor = 1; // this will be overridden in settings()
float minimumImageSize = 10.0f;
boolean firstDraw = true;
int currentImage = 1;

void settings() {
  // load the source image
  img = loadImage(imageFilename);
  // load the pixel colors into the pixels array
  img.loadPixels();
  // create a canvas that is proportional to the selected image
  size((int)(img.width / scalingFactor), (int)(img.height / scalingFactor));
  // scale the image for the window size
  scaledImage = loadImage(imageFilename);
  scaledImage.resize(width, height);
  // override velocityFactor
  velocityFactor = (int)(width / velocityFactorDivisor);
}

void setup() {
  // disable outlines
  noStroke();
}

void keyPressed() {
  if (keyCode == KeyEvent.VK_R) {
    firstDraw = true; // if the user presses R, then reset
  }
}

void draw() {
  if (firstDraw) {
    image(scaledImage, 0, 0);
    firstDraw = false;
  }

  // right click to render to image file
  if (mousePressed && mouseButton == RIGHT) {
    save(imageFilename.replace(".jpg", "") + "_render_" + currentImage + ".tga");
    currentImage++;
  }

  if (mousePressed && mouseButton == LEFT && mouseX >= 0 && mouseX < width && mouseY >= 0 && mouseY < height) {
    int velocityX = minimumVelocity + (3 * velocity(mouseX, pmouseX, width));
    int velocityY = minimumVelocity + (3 * velocity(mouseY, pmouseY, height));
    color c = img.pixels[mousePositionToPixelCoordinate(mouseX, mouseY)];
    int vertexCount = ((3 * velocityFactor) + velocityX + velocityY) / velocityFactor;
    int minimumX = mouseX - (velocityX / 2);
    int maximumX = mouseX + (velocityX / 2);
    int minimumY = mouseY - (velocityY / 2);
    int maximumY = mouseY + (velocityY / 2);

    PGraphics pg = createGraphics(maximumX - minimumX, maximumY - minimumY);
    pg.beginDraw();
    pg.noStroke();
    pg.fill(c);
    // first draw a random shape into the buffer
    pg.beginShape();
    for (int i = 0; i < vertexCount; i++) {
      pg.vertex(random(0, pg.width), random(0, pg.height));
    }
    pg.endShape();
    pg.endDraw();
    pg.loadPixels();

    // then copy image pixels into the shape
    // get the upper left coordinate in the source image
    int startingCoordinateInSourceImage = mousePositionToPixelCoordinate(minimumX, minimumY);
    // get the width of the source image
    int sourceImageWidth = img.width;
    // set the offset from the source image
    int offsetX = velocity(mouseX, pmouseX, width);
    int offsetY = velocity(mouseY, pmouseY, height);
    // ensure that the offset doesn't go off the canvas
    if (mouseX > width / 2) offsetX *= -1;
    if (mouseY > height / 2) offsetY *= -1;

    for (int y = 0; y < pg.height; y++) {
      for (int x = 0; x < pg.width; x++) {
        // calculate the coordinate in the destination image
        int newImageY = y * pg.width;
        int newImageX = x;
        int newImageCoordinate = newImageX + newImageY;
        // calculate the location in the source image
        //int sourceImageCoordinate = (int)(startingCoordinateInSourceImage + (x * scalingFactor) + ((y * scalingFactor) * sourceImageWidth));
        //int sourceImageCoordinate = (int)(startingCoordinateInSourceImage + ((x + offsetX) * scalingFactor) + (((y + offsetY) * scalingFactor) * sourceImageWidth));
        int sourceImageX = (int)((x + offsetX) * scalingFactor);
        int sourceImageY = (int)(((y + offsetY) * scalingFactor) * sourceImageWidth);
        int sourceImageCoordinate = startingCoordinateInSourceImage + sourceImageX + sourceImageY;
        // ensure the calculated coordinates are within bounds (index 0 included),
        // and only replace pixels that belong to the shape (they match the fill color)
        if (newImageCoordinate >= 0 && newImageCoordinate < pg.pixels.length
            && sourceImageCoordinate >= 0 && sourceImageCoordinate < img.pixels.length
            && pg.pixels[newImageCoordinate] == c) {
          pg.pixels[newImageCoordinate] = img.pixels[sourceImageCoordinate];
        }
      }
    }

    // write the modified pixels back into the buffer before drawing it
    pg.updatePixels();
    image(pg, minimumX, minimumY);
  }
}

// convert a canvas position to an index in the source image's pixels array
int mousePositionToPixelCoordinate(int mouseX, int mouseY) {
  return (int)(mouseX * scalingFactor) + (int)((mouseY * scalingFactor) * img.width);
}

// Despite the name, this is effectively the absolute distance (in pixels) the mouse
// moved along one axis since the last frame; dividing by size and multiplying by it cancels out.
int velocity(float x1, float x2, float size) {
  int val = (int)(Math.abs((x1 - x2) / size) * size);
  return val;
}
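One detail worth knowing about the code above: `mousePositionToPixelCoordinate()` flattens a position into Processing's row-major `pixels[]` array (index = x + y * width), so a point that falls off the left or right edge of the image silently wraps onto a neighboring row instead of being rejected. Here is a plain-Java sketch of a bounds-checked alternative; `PixelIndex.toIndex` is a hypothetical helper, not part of Processing.

```java
// Bounds-checked conversion from a canvas position to a row-major pixel index.
class PixelIndex {
    // Returns x + y * imageWidth in source-image space, or -1 if the point is off the image.
    static int toIndex(float canvasX, float canvasY, float scalingFactor,
                       int imageWidth, int imageHeight) {
        int x = (int) Math.floor(canvasX * scalingFactor);
        int y = (int) Math.floor(canvasY * scalingFactor);
        if (x < 0 || x >= imageWidth || y < 0 || y >= imageHeight) {
            return -1; // reject instead of wrapping onto another row
        }
        return x + y * imageWidth;
    }

    public static void main(String[] args) {
        System.out.println(toIndex(3, 2, 2.0f, 100, 100));   // prints 406
        System.out.println(toIndex(-10, 2, 2.0f, 100, 100)); // prints -1
    }
}
```

In a sketch like the one above, you would skip any pixel for which the helper returns -1 rather than reading from the wrong row.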

How to render synchronous audio and video in Processing in 2021

About a decade ago I wrote a blog post about rendering synchronous audio and video in Processing. I posted it on my now-defunct blog, computermusicblog.com. Recently, I searched for the same topic, and found that my old post was one of the top hits, but my old blog was gone.

So in this post I want to give searchers an updated guide for rendering synchronous audio and video in Processing.

It's still a headache to render synchronous audio and video in Processing, but with the technique here you should be able to copy my work and create a simple 2-click process that will get you the results you want in under 100 lines of code.

Prerequisites

You must install Processing, Minim, VideoExport, and ffmpeg on your computer. Processing can be installed from processing.org/. Minim and VideoExport are Processing libraries that you can add via Processing menus (Sketch > Import Library > Add Library). You must add ffmpeg to your path. Google how to do that.

The final, crappy prerequisite for this particular tutorial is that you must be working with a pre-rendered wav file. In other words, this will work for generating Processing visuals that are based on an audio file, but not for Processing sketches that synthesize video and audio at the same time.

Overview

Here's what the overall process looks like.

- Run the Processing sketch. Press q to quit and render the video file.

- Run ffmpeg to combine the source audio file with the rendered video.
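If you would rather not keep a separate batch file for step 2, the same ffmpeg call can be launched from Java with `ProcessBuilder`. This is a minimal sketch: the flags are the ones from the ffmpeg command in the header comment of the sketch in this post, the file names are assumptions about your folder layout, and it only works if ffmpeg is on your path.

```java
import java.io.IOException;
import java.util.Arrays;
import java.util.List;

// Builds and runs the ffmpeg command that muxes the wav file into the silent render.
class FfmpegMux {
    static List<String> muxCommand(String video, String audio, String output) {
        return Arrays.asList(
            "ffmpeg",
            "-i", video,     // silent video rendered by VideoExport
            "-i", audio,     // the original wav file
            "-c:v", "copy",  // keep the video stream as-is
            "-c:a", "aac",   // encode the audio as AAC
            "-shortest",     // stop at the end of the shorter stream
            output);
    }

    static int run(List<String> command) throws IOException, InterruptedException {
        Process p = new ProcessBuilder(command).inheritIO().start();
        return p.waitFor(); // ffmpeg's exit code; 0 means success
    }

    public static void main(String[] args) throws Exception {
        List<String> cmd = muxCommand("render.mp4", "data/audio.wav", "output.mp4");
        System.out.println(String.join(" ", cmd));
        System.exit(run(cmd));
    }
}
```

Passing the arguments as a list avoids shell-quoting problems if your file names contain spaces.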

Source Code

Without further ado, here's the source. This code is a simple audio visualizer that paints the waveform over a background image. Notice the ffmpeg instructions in the long comment at the top.

/*
  This is a basic audio visualizer created using Processing.
  Press q to quit and render the video.

  For more information about Minim, see http://code.compartmental.net/tools/minim/quickstart/
  For more information about VideoExport, see https://timrodenbroeker.de/processing-tutorial-video-export/

  Use ffmpeg to combine the source audio with the rendered video.
  See https://superuser.com/questions/277642/how-to-merge-audio-and-video-file-in-ffmpeg

  The command will look something like this:

  ffmpeg -i render.mp4 -i data/audio.wav -c:v copy -c:a aac -shortest output.mp4

  I prefer to add ffmpeg to my path (google how to do this), then put the above command
  into a batch file.
*/

// Minim for playing audio files
import ddf.minim.*;

// VideoExport for rendering videos
import com.hamoid.*;

// Audio related objects
Minim minim;
AudioPlayer song;
String audioFile = "audio.wav"; // The filename for your music. Must be a 16 bit wav file. Use Audacity to convert.

// image related objects
float scaleFactor = 0.25f; // Multiplied by the image size to set the canvas size. Changing this is how you change the resolution of the sketch.
int middleY = 0; // this will be overridden in setup
PImage background; // the background image
String imageFile = "background.jpg"; // The filename for your background image. The file must be present in the data folder for your sketch.

// video related objects
// (named targetFrameRate so it doesn't shadow Processing's built-in frameRate variable)
int targetFrameRate = 24; // This framerate MUST be achievable by your computer. Consider lowering the resolution.
VideoExport videoExport;

public void settings() {
  background = loadImage(imageFile);
  // set the size of the canvas window based on the loaded image
  size((int)(background.width * scaleFactor), (int)(background.height * scaleFactor));
}

void setup() {
  frameRate(targetFrameRate);
  videoExport = new VideoExport(this, "render.mp4");
  videoExport.setFrameRate(targetFrameRate);
  videoExport.startMovie();
  minim = new Minim(this);
  // the second param sets the buffer size to the width of the canvas
  song = minim.loadFile(audioFile, width);
  middleY = height / 2;
  if (song != null) {
    song.play();
  }
  fill(255);
  stroke(255);
  strokeWeight(2);
  // tell Processing to draw images semi-transparent
  tint(255, 255, 255, 80);
}

void draw() {
  image(background, 0, 0, width, height);
  // draw the current audio buffer as a waveform (skipped if the audio failed to load)
  if (song != null) {
    for (int i = 0; i < song.bufferSize() - 1; i++) {
      line(i, middleY + (song.mix.get(i) * middleY), i + 1, middleY + (song.mix.get(i + 1) * middleY));
    }
  }
  videoExport.saveFrame(); // render a video frame
}

void keyPressed() {
  if (key == 'q') {
    videoExport.endMovie(); // Render a silent mp4 video.
    exit();
  }
}
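Why must the frame rate be achievable? Minim plays the audio in real time, but each call to `saveFrame()` adds exactly one frame to a video that, assuming VideoExport simply plays saved frames back at the rate passed to `setFrameRate()`, runs at that fixed rate. If `draw()` falls behind, fewer frames get saved than the audio needs, and the finished video comes up short of the song. The arithmetic, as a plain-Java sketch with hypothetical helper names:

```java
// Frame-count arithmetic for syncing a rendered video with a pre-rendered audio file.
class FrameMath {
    // Frames needed to cover the whole audio file at the target frame rate.
    static long framesNeeded(double audioSeconds, int fps) {
        return (long) Math.ceil(audioSeconds * fps);
    }

    // Seconds by which the video falls short of the audio if draw() only
    // managed actualFps while the audio played in real time.
    static double shortfallSeconds(double audioSeconds, int targetFps, double actualFps) {
        double framesSaved = audioSeconds * actualFps;
        double videoSeconds = framesSaved / targetFps;
        return audioSeconds - videoSeconds;
    }

    public static void main(String[] args) {
        System.out.println(framesNeeded(180.0, 24));          // prints 4320
        System.out.println(shortfallSeconds(60.0, 24, 20.0)); // prints 10.0
    }
}
```

So a three-minute song at 24 fps needs 4,320 frames, and a sketch that only manages 20 fps on a 60-second track produces a video about 10 seconds too short. That is why lowering `scaleFactor` helps: a smaller canvas is cheaper to draw.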

Composing with All Sound

I sometimes get asked about my dissertation, so I wanted to write a blog post to explain it. In this post, I describe the work I did for my dissertation, which brought together web APIs and a network model of creativity.

Composing with all sound

When I was in graduate school I was somewhat obsessed with the idea of composing with all sound. When I say "composing with all sound" I don't mean composing with a lot of sounds. I mean literally all sound that exists. Composing with all sounds that have ever existed is literally impossible, but the internet gives us a treasure trove of sound.

So I looked at the largest collections of sound on the internet, and the best that I found in 2011 was the website freesound.org/. Freesound is great for several reasons.

- The library is absolutely massive and constantly growing

- They have a free-to-use API

- Users can upload tags and descriptions of the sounds

- Freesound analyzes the sounds and gives access to those descriptors via the API

A model of creativity

Once I had a source for sounds, all I needed was a way to connect them. Neural network research was blossoming in 2011, so I tried to find a neural model that I could connect to the data on Freesound. That's when I found Melissa Schilling's network model of cognitive insight.

Schilling's theory essentially sees ideas as networks in the brain. Ideas are connected by various relationships. Ideas can look alike, sound alike, share a similar space, be described by similar words, and so on. In Schilling's model, cognitive insight, or creativity, occurs when two formerly disparate networks of ideas are connected using a bridge.
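Here is a toy Java illustration of that idea under simplifying assumptions (this is not Schilling's formal model): ideas are nodes, associations are edges, and an "insight" is a new edge that joins two clusters that were previously separate. A union-find structure keeps track of the clusters; the idea names in any usage are made up.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Toy model: ideas are nodes, associations are edges, clusters tracked by union-find.
class IdeaNetwork {
    private final Map<String, String> parent = new HashMap<>();

    // Representative idea of the cluster containing x (adds x if it is new).
    private String find(String x) {
        parent.putIfAbsent(x, x);
        String root = parent.get(x);
        if (!root.equals(x)) {
            root = find(root);
            parent.put(x, root); // path compression
        }
        return root;
    }

    // Records an association; returns true if it bridged two separate clusters.
    boolean connect(String a, String b) {
        String rootA = find(a);
        String rootB = find(b);
        if (rootA.equals(rootB)) {
            return false; // already linked: nothing new
        }
        parent.put(rootA, rootB);
        return true; // a bridge between formerly disparate networks
    }

    int clusterCount() {
        Set<String> roots = new HashSet<>();
        for (String idea : new ArrayList<>(parent.keySet())) {
            roots.add(find(idea));
        }
        return roots.size();
    }
}
```

For example, after linking "scream" to "shout" and "rain" to "thunder" there are two clusters; a later link from "shout" to "thunder" returns `true` because it is the kind of bridge the model treats as insight.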

So to compose with all the sounds on Freesound, all I needed to do was to organize sounds into networks, then find new ways to connect them. But how could I organize sounds into networks, and what would a new connection look like?

The Wordnik API

I realized that I could make lexical networks of sounds. The tags attached to sounds on Freesound give us a way to connect them. For instance, we could find all sounds that have the tag "scream" and form them into one network.
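Grouping sounds by shared tags takes only a few lines. In this minimal Java sketch, an in-memory list of sounds stands in for tag data that would really come from the Freesound API. Each tag becomes one network containing every sound that carries it. The names `TaggedSound` and `TagNetworks.byTag` are hypothetical.

```java
import java.util.*;

// Hypothetical stand-in for sound metadata; real tags would come from the Freesound API.
final class TaggedSound {
    final String id;
    final Set<String> tags;

    TaggedSound(String id, Set<String> tags) {
        this.id = id;
        this.tags = tags;
    }
}

class TagNetworks {
    // One network per tag: every sound carrying that tag belongs to the tag's network.
    // Sound ids are listed in the order the sounds were supplied.
    static Map<String, List<String>> byTag(List<TaggedSound> sounds) {
        Map<String, List<String>> networks = new TreeMap<>();
        for (TaggedSound s : sounds) {
            for (String tag : s.tags) {
                networks.computeIfAbsent(tag, k -> new ArrayList<>()).add(s.id);
            }
        }
        return networks;
    }
}
```

A sound with several tags lands in several networks. Those shared members are already-existing links, which is why the next step needs an outside source for new connections.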

To make creative jumps, I had to bring in a new data source. After all, the sounds that share a tag are already connected.

That's when I incorporated the Wordnik API. Wordnik is an incredibly complete dictionary, thesaurus, and encyclopedia all wrapped into one. Best of all, they expose it through a fast and affordable API.

Composing with all sound using Freesound, a network model of creativity, and lexical relationships

So the final algorithm looks something like this, although there are many ways to vary it.

- Start with a search term provided by a user

- Build a network of sounds with that search term

- Use Wordnik to find related words

- Build a network around the related words

- Connect the original network to the new one

- Repeat
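The loop above can be sketched in Java under some loud assumptions. `soundsByTag` is a hypothetical in-memory map standing in for Freesound search results. `relatedWords` is a hypothetical map standing in for Wordnik's related-word lookups. Each network is built as a star around its first sound. Choosing the first unused related word that has sounds is just one of the many possible variations. Each new network is bridged to the most recently built one.

```java
import java.util.*;

// Minimal sketch of the network-growing loop.
// Assumptions: soundsByTag stands in for Freesound search, relatedWords stands in for Wordnik;
// both are hypothetical in-memory maps, and the star/first-word choices are illustrative only.
class SoundNetworkBuilder {
    final Map<String, Set<String>> graph = new LinkedHashMap<>();
    final List<String> termsUsed = new ArrayList<>();

    private void link(String a, String b) {
        graph.computeIfAbsent(a, k -> new LinkedHashSet<>()).add(b);
        graph.computeIfAbsent(b, k -> new LinkedHashSet<>()).add(a);
    }

    // Build a star-shaped network: every sound with the term links to the first sound found.
    private String buildNetwork(List<String> sounds) {
        String hub = sounds.get(0);
        graph.computeIfAbsent(hub, k -> new LinkedHashSet<>());
        for (String s : sounds.subList(1, sounds.size())) link(hub, s);
        return hub;
    }

    void grow(String searchTerm, int steps,
              Map<String, List<String>> soundsByTag,
              Map<String, List<String>> relatedWords) {
        String term = searchTerm;
        List<String> sounds = soundsByTag.getOrDefault(term, List.of());
        if (sounds.isEmpty()) return;                 // nothing to start from
        String hub = buildNetwork(sounds);            // network for the user's search term
        termsUsed.add(term);
        for (int i = 0; i < steps; i++) {
            // Pick the first related word that is unused and has sounds of its own.
            String next = null;
            for (String w : relatedWords.getOrDefault(term, List.of())) {
                if (!termsUsed.contains(w) && !soundsByTag.getOrDefault(w, List.of()).isEmpty()) {
                    next = w;
                    break;
                }
            }
            if (next == null) break;                  // no new words to bridge to
            String newHub = buildNetwork(soundsByTag.get(next));
            if (!newHub.equals(hub)) link(hub, newHub); // the bridge between the two networks
            termsUsed.add(next);
            term = next;                              // repeat from the new word
            hub = newHub;
        }
    }
}
```

Starting from "scream", with "shout" related to "scream" and "yell" related to "shout", the builder visits scream, shout, yell in that order and joins their three networks with two bridging edges.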

To sonify the resulting networks, I used a simple model of artificial intelligence that is sort of like a cellular automaton. I released a swarm of simple automata on the network and triggered a sound whenever the number of automata on it reached a critical mass.
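Here is one way the critical-mass rule could look, as a hypothetical Java sketch. Agents random-walk over a sound network. A sound counts as "on" when at least `criticalMass` agents sit on it. The random-walk movement rule, the seeded `Random`, and the names `SwarmSonifier`, `step`, and `activeSounds` are assumptions for illustration. In a real piece, each active sound would trigger playback of its sample.

```java
import java.util.*;

// Hypothetical sketch: simple agents wander a sound network, and a sound turns on
// when the number of agents on it reaches a threshold. The movement rule is an assumption.
class SwarmSonifier {
    private final Map<String, List<String>> neighbors;
    private final List<String> positions = new ArrayList<>();
    private final Random random;

    SwarmSonifier(Map<String, List<String>> neighbors, String start, int agents, long seed) {
        this.neighbors = neighbors;
        this.random = new Random(seed);
        for (int i = 0; i < agents; i++) positions.add(start); // every agent starts on one sound
    }

    // Move every agent to a randomly chosen neighbor of its current sound (or stay if it has none).
    void step() {
        for (int i = 0; i < positions.size(); i++) {
            List<String> options = neighbors.getOrDefault(positions.get(i), List.of());
            if (!options.isEmpty()) positions.set(i, options.get(random.nextInt(options.size())));
        }
    }

    // Return the sounds whose agent count has reached the critical mass.
    Set<String> activeSounds(int criticalMass) {
        Map<String, Integer> counts = new HashMap<>();
        for (String p : positions) counts.merge(p, 1, Integer::sum);
        Set<String> active = new TreeSet<>();
        counts.forEach((sound, n) -> { if (n >= criticalMass) active.add(sound); });
        return active;
    }
}
```

The threshold controls the texture of the result. A low critical mass lets many sounds play at once. A high one keeps the output sparse until the swarm clusters.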

Results

Here are the results of my dissertation. You can read a paper I presented at a conference and the dissertation itself, then listen to the music written by this program. It's not Beethoven, but I guarantee that you will find it interesting.

Composing with All Sound Using the FreeSound and Wordnik APIs

Method for Simulating Creativity to Generate Sound Collages from Documents on the Web

Disconnected: Algorithmic music composed using all sounds and a network model of creativity

When I'm feeling down...

Sometimes when I'm feeling bad about myself I remember that someone spent the time to rip and upload the music I wrote for a flash game nearly two decades ago. It was for a game called Arrow of Time. And it kicked ass.