It's really difficult to write about E. M. Forster

When I started getting into A Room with a View a few years back, I kept reading it over and over again. In each reading, I would find something new. Something would be changed, revealed, or transmuted into something new. It was fascinating. But there were still things that I didn't understand. Some of the places and artists were obscure. Some of the referenced literature was very obscure.

I started taking notes on the novel. Whenever I came across something that was obscured by distance, time, or education, I would look it up online and write a little note to myself about it. Eventually, I had enough of these to think, 'Hey, I should share these with other people!'

That's where things got tricky. After all, I don't want to share spurious or incorrect notes. I want to share notes that increase the enjoyment of the book for casual readers and fans. But how can I know if one of my notes is incorrect? How can I be sure that what I'm pointing out isn't very subjective or obvious?

So I thought I should at least read his other novels to get some context. I read his first novel first. Where Angels Fear to Tread is, in most respects, not a great novel. But it does begin to reveal Forster's unique approach to realism. Next I picked up The Longest Journey. That book is truly boring. It was a slog to get through, and I don't know how anyone enjoys it without knowing a lot about Forster's biography.

Then I read The Machine Stops, which is fabulous and unique and ahead of its time. So I thought maybe Forster had a particular gift for short fiction. I read all of his short fiction that was in collections (which I now know is not all of his short fiction). It was excellent, but there wasn't very much of it. So I went back to the novels. I finished with Howards End, A Passage to India, and Maurice, each of which is a masterpiece in its own way.

Then I went back to my original task. I could finally say something about Forster's most popular book from a position of authority, right?

Well, no. I soon learned that Forster produced even more essays and non-fiction than he produced fiction. I read his guide to Alexandria, and some of his essay collections. I couldn't get through it all, and some of his collections are difficult to acquire these days.

Then I thought I should read a biography. So I read Wendy Moffat's excellent book.

At this point, I'm years away from my original task. I have more or less forgotten that I ever wanted to say anything about Forster and his most famous novel. Still, I've realized that he also wrote a lot of letters, and the selected letters are available in bound collections. I'm duty-bound to read them, right?

I read as many of the letters as I could, but I think you can see the problem. It's really hard to say anything with authority about a writer who produced such a massive volume of words as E. M. Forster. The novels, essays, non-fiction, lectures, and letters amount to such a vast quantity of work that I would put it up against even the most fecund modern novelists. It's unbelievable.

Then there are the critics, biographers, and academics. I can see how a grad student could get very disheartened. How can you hope to add to the vast discourse on such a popular, beloved author?

I don't know if I will ever finish taking notes on A Room with a View (and now Forster's other novels too). All I know at this point is that the journey has become the goal. Reading and studying Forster's work and the work about Forster has become a little hobby of mine. Maybe I won't ever say anything about Forster, but I'll have a lot of fun not saying it!

SoundCloud, I love you, but you're terrible

I finally started using SoundCloud for a new electro project called Fynix. I casually used it in the past under my own name, in order to share WIP tracks, or just odd stuff that didn't fit on bandcamp. But I never used it seriously until recently. Now I am using it every day, and trying to connect with other artists. I am remixing one track a week, listening to everything on The Upload, and liking/commenting as much as I can.

SoundCloud is the best social network for musicians right now. But it still has a terrible identity crisis. Most of the services seem to be aimed at listeners, or aimed at nobody in particular.

So in this post, I'm going to vent about SoundCloud. It's a good platform, but with a few changes it could be great.

1. I am an artist. Stop treating me like a listener.

Is it really that difficult for you to recognize that I am a musician, and not a listener? I've uploaded 15 tracks. It seems like a pretty simple conditional check to me. So why is my home feed cluttered up with reposts? Why can't I easily find the new tracks by my friends?

This is the core underlying problem with SoundCloud. It has two distinct types of users, and yet it treats all users the same.

2. Your "Who to Follow" recommendations suck. They REALLY suck.

I've basically stopped checking "Who to Follow" even though I want to connect with as many musicians as possible. The recommendations seem arbitrary and just plain stupid.

The main problem is that, as a musician, I want to follow other musicians. I want to follow people who will interact with me, and who will promote my work as much as I promote theirs. Yet, the "Who to Follow" list is full of seemingly random people.

Is this person from the same city as me? No. Do they follow lots of people / will they follow back? No. Are they working in a genre similar to mine? No. Do they like and comment on lots of tracks? No.

So why the heck would I want to follow them?

3. Where are my friends' latest tracks?

This one is just infuriating. When I log in, I want to see the latest tracks posted by my friends. So I go to my home screen, and it is pure luck if I can find something posted by someone I actually talk to on SoundCloud. It's all reposts. Even if I unfollow all the huge repost accounts, I am stuck looking at reposts by my friends, rather than their new tracks.

Okay, so let's click the dropdown and go to the list of users I am "following". Are they sorted by recent activity? No. They are sorted by the order in which I followed them. To find out if they have new tracks, I must click on them individually and check their profiles. Because that is really practical.

Okay, so maybe there's a playlist of my friends' tracks on the Discover page? Nope. It's all a random collection of garbage.

As far as I can tell, there is no way for me to listen to my friends' recent tracks. This discourages real interactions.
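To show how small this feature is, here is a minimal sketch of the feed I'm asking for. Everything in it is hypothetical: `FeedItem`, its fields, and `latest_original_tracks` are names made up for illustration, and I have no idea how SoundCloud actually models its feed. It assumes each feed item records who posted it, when, and whether it is a repost.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class FeedItem:
    """Hypothetical feed entry; not SoundCloud's real data model."""
    user: str            # account that put the item in the feed
    track: str
    posted_at: datetime
    is_repost: bool      # True when the user is resharing someone else's track


def latest_original_tracks(feed, following, limit=20):
    """Return original uploads from followed users, newest first."""
    originals = [
        item for item in feed
        if item.user in following and not item.is_repost
    ]
    originals.sort(key=lambda item: item.posted_at, reverse=True)
    return originals[:limit]


feed = [
    FeedItem("ana", "Night Drive", datetime(2018, 3, 1), False),
    FeedItem("ana", "Someone Else's Hit", datetime(2018, 3, 5), True),
    FeedItem("ben", "Blue Loop", datetime(2018, 3, 4), False),
    FeedItem("stranger", "Random Upload", datetime(2018, 3, 6), False),
]
recent = latest_original_tracks(feed, following={"ana", "ben"})
print([item.track for item in recent])  # ['Blue Loop', 'Night Drive']
```

The repost and the stranger's upload drop out, and what remains is sorted by recency. That is the whole feature.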

Ultimately, the problem is data, and intelligence. SoundCloud has none. You could blame design for these problems. The website shows a lack of direction, as if committees are leading the product in lots of different directions. SoundCloud seems to want to focus on listeners, to compete in the same space as Spotify.

But even if that's the case, it should be trivial to see that I don't use the website like a regular listener. I use it like a musician. I want to connect and interact with other musicians.

And this is such a trivial data/analytics problem that I can only think that they aren't led by data at all. Maybe this is just what I see because I lead our data team, but it seems apparent to me that data is either not used, or used poorly in all these features.

For instance, shouldn't the "Who to Follow" list be based on who I have followed in the past? I've followed lots of people who make jazz/electro music, yet no jazz/electro artists are in my "Who to Follow" list. I follow people who like and comment on my tracks, yet I am told to follow people who follow 12 people and have never posted a comment.
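A rough sketch of the kind of ranking I have in mind is below. The `Candidate` fields, the weights, and the caps are all assumptions I picked for illustration, not anything SoundCloud exposes or uses. The idea is simply to score each candidate on the signals listed above: shared genres first, then how much they comment and how many people they follow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """Hypothetical profile summary; field names are made up for this sketch."""
    name: str
    genres: frozenset
    comments_posted: int
    following_count: int


def score(candidate, my_genres):
    """Higher is better: shared genres first, then signs of real interaction."""
    shared = len(candidate.genres & my_genres)
    comments = min(candidate.comments_posted, 50) / 50    # capped at 1.0
    follows = min(candidate.following_count, 200) / 200   # capped at 1.0
    return 2.0 * shared + comments + follows              # arbitrary weights


def who_to_follow(candidates, my_genres, limit=3):
    """Return the names of the best-scoring candidates."""
    ranked = sorted(candidates, key=lambda c: score(c, my_genres), reverse=True)
    return [c.name for c in ranked[:limit]]


my_genres = frozenset({"jazz", "electro"})
candidates = [
    Candidate("quiet_lurker", frozenset({"pop"}), 0, 12),
    Candidate("jazz_bot", frozenset({"jazz", "electro"}), 40, 300),
    Candidate("electro_kid", frozenset({"electro"}), 10, 100),
]
suggestions = who_to_follow(candidates, my_genres)
print(suggestions)  # ['jazz_bot', 'electro_kid', 'quiet_lurker']
```

The account that follows 12 people and has never commented lands at the bottom, which is where it belongs.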

The most disappointing thing is that none of this is hard.

4. Oh yeah, and your browser detection sucks.

When I am browsing your site on my tablet, I do not want to use the app. I do not want your very limited mobile site. I just want the regular site (and yes, I know I can get it with a few extra clicks, but it should be the default).

Tips for Managing Joins in Looker

Looker is a fantastic product. It really makes data and visualizations much more manageable. The main goal of Looker is to allow people who aren't data analysts to do some basic data analysis. To some extent, it achieves this, but there are limits to how far this can go. Ultimately, Looker is a big graphical user interface for writing SQL and generating charts. Under the hood, it's programmable by data engineers, but it's limited by the fact that non-technical users are using it.

The major design challenge for Looker is joins. A data engineer writes the joins into what Looker calls "explores". Explores are rules for how data can be explored, but ultimately just a container for joins. When someone creates a new chart, they start by selecting an explore, and thus selecting the joins that will be used in the chart.

They pick the explore, and with it the joins, from a dropdown under the word "Explore". This is the main design bottleneck. Such a UI encourages teams to keep only as many explores as can fit in the vertical resolution of the screen. This means limiting the number of explores, and hence limiting the ways tables are joined. This encourages using pre-existing joins for new charts.

This creates two problems.

- A non-technical user will not understand the implication of choosing an explore. They may not see that the explore they chose limits how the data can be analyzed. In fact, a non-savvy user may pick the wrong explore entirely, and create a chart that is entirely wrong.

- The joins may evolve over time. A programmer might change a join for a new chart, and this may make old charts incorrect.

The problem is that SQL joins are fundamentally interpretations of the data. Unless a join occurs on id fields AND is a one-to-one relationship, the join interprets the data in some way.
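Here is a small, self-contained illustration of what "interprets the data" can mean in practice. It uses Python's built-in `sqlite3` module rather than Looker itself, and the `products` and `product_events` tables are made up for the example. The join is on id fields, but it is one-to-many, so the same sum gives a different answer once the join is in place.

```python
import sqlite3

# In-memory database with two hypothetical tables.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL);
    CREATE TABLE product_events (
        id INTEGER PRIMARY KEY, product_id INTEGER, kind TEXT
    );
    INSERT INTO products VALUES (1, 'widget', 10.0), (2, 'gadget', 20.0);
    INSERT INTO product_events VALUES
        (1, 1, 'view'), (2, 1, 'view'), (3, 1, 'purchase'), (4, 2, 'view');
""")

# Sum of prices with no join: one row per product.
total_alone = conn.execute("SELECT SUM(price) FROM products").fetchone()[0]

# The same sum through a one-to-many join: each product row is repeated
# once per matching event, so the widget's price is counted three times.
total_joined = conn.execute("""
    SELECT SUM(p.price)
    FROM products AS p
    JOIN product_events AS e ON e.product_id = p.id
""").fetchone()[0]

print(total_alone, total_joined)  # 30.0 50.0
```

Neither number is wrong as SQL. They answer different questions, and a user who picks an explore from a dropdown has no easy way to see which question they are asking.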

So how can you limit the negative impact of re-using joins?

1. Encourage simple charts

Encourage your teammates to make charts as simple as possible. If possible, a chart should show a single quantity as it changes over a single dimension. This should eliminate or minimize the use of joins in the chart, thus making it far more future-proof.

2. Give explores long, verbose names

Make explore names as descriptive as possible. Try to communicate the choice that a user is making when they choose an explore. For instance, you might name one explore "Products Today" and another one "Product Events Over Time". These names might indicate that the first explore looks at the products table, but the second explore shows events relating to products joined with a time dimension.

One of the mistakes I made when first starting out with Looker was giving the explores single-word names. I now see that short names create maintenance nightmares. Before assessing the problems with a given chart, I need to know which explore the maker chose for it, and because the names were selected so poorly, the choice was often incorrect.

I hope these ideas help you find a path to a maintainable data project. To be honest, I have a lot of digging-out to do!

Pride in Software Craftsmanship

As I spend more and more time in Silicon Valley, my views on software management are changing. I read Radical Candor recently, and while I agree with everything in it, I feel like it over-complicates things.

This meditation has been pushed in part by my passion for food. I like going to new restaurants. It brings me joy to try something new, even if it's not a restaurant that would ever be considered for a Michelin Star. Even crappy looking restaurants can serve great food.

I am often awed by the disconnect between various parts of the restaurant business and the quality of the food. Some restaurants are spotlessly clean, have beautiful decor, and amazing service… but the food is mediocre. The menu is bland and uninspired, and the food itself is prepared with all the zeal that a minimum wage employee can manage.

Then I'll go to a dirty looking Greek joint down the road, and the service will be awful… but the menu is inspired. It's not the standard "Greek" menu, but it's got little variations on the dishes. And when the food comes out (finally), maybe it isn't beautiful on the plate, but the flavors come together to make something greater than the ingredients and the recipe.

What seems to distinguish a good restaurant from a crappy one is pride. At restaurants that I return to, there is someone there, maybe a manager, maybe a cook, maybe the chef who designed the menu, who takes great pride in his work.

There's a diner by my old house, for instance, where the food is … diner food. There's no reason to go back to the restaurant… except for the manager. The man who runs the floor, seats the patrons, deals with the kitchen, and does all the little things that make a restaurant tick. He manages to make that particular diner worth going to. And for a guy who has two young kids, that's terrific.

I am starting to think that the same basic principle applies to software engineers. I've met brilliant engineers with all sorts of characteristics. Some of them have a lot of education and read all the latest guides. Others have little education, and don't read at all. The main thing that makes them good engineers is that they take pride in their work. They care about the quality of their work, regardless of how many people are going to use it, or how much time they put into it. They write quality code because their work matters.

So when it comes to managing software projects, I'm starting to think that all of these systems boil down to two basic steps.

- Put your engineers in a position to take pride in their work.

- Get out of the way.

Obviously, the first step is non-trivial. It's why there are so many books on the topic. But at the end of the day, pride is what matters.

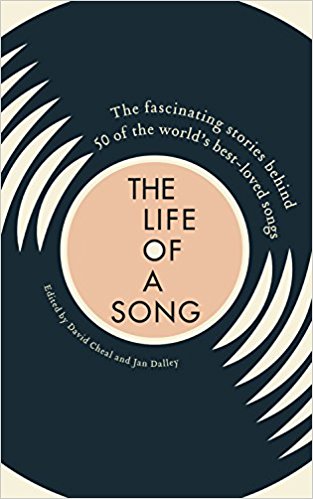

Book Review: Life of a Song

I recently had the chance to read Life of a Song: The fascinating stories behind 50 of the world's best-loved songs. It's a concise collection of fifty Life of a Song articles from the Financial Times. As I rarely have a reason to visit the FT website, and I only occasionally catch the Life of a Song podcast, the book was a great opportunity to catch up on what I'd missed. Regular readers may find nothing new in the book, but for pop fans and die-hard listeners, the short collection is definitely worth a read.

The book consists of fifty articles from the regular Life of a Song column collected into book form. Each article takes on a different, well-loved tune from twentieth-century popular music. Songs covered include 'My Way', 'Midnight Train to Georgia', '1999', 'La Vie en Rose', and 'This Land is Your Land'. There are only a few songs in the list that I didn't know off the top of my head, including 'Song to the Siren' and 'Rocket 88'. The articles usually include some remarks about the songwriter, often quoting them about their creation. Then they cover the journey from composition to hit recording, and usually mention other interpretations that followed the hit.

Each article appears to be less than 1000 words. As you might expect, that's a lot to cover in so little room. So each article is pretty topical, relating a single anecdote about the song, and only touching on the rest. For instance, in the article about 'Like a Rolling Stone', the author relates the recording process that shaped the final sound.

On take four of the remake, serendipity strikes. Session guitarist Al Kooper, 21, a friend of the band, walks in holding his guitar, hoping to join in. He is deemed surplus to requirements, but Dylan decides he wants an organ in addition to piano, and Kooper volunteers to fill in. He improvises his part, as he would later recall, 'like a little kid fumbling in the dark for a light switch'. And suddenly the song turns into the tumbling, cascading version that will become the finished article.

There are two pieces of information that you need to know about this book in order to enjoy it.

- It is a collection of short articles by many contributors.

- Those writers are almost entirely arts journalists, rather than trained musicians.

This book was written by a lot of authors. I counted fourteen contributors, each of whom appears to be an English journalist. This can lead to the book feeling somewhat disjointed. Each author is comfortable talking about their own domain of the music industry. Some interpret the lyrics, others relate interviews with creators, others pick up on business maneuvers behind the scenes.

In the introduction, David Cheal and Jan Dalley write that the book "is not about singers, or stars, or chart success – although of course they come into the story. It is about the music itself". If you are a musician, this may leave you expecting musical analysis, lyrical breakdowns, or at least comparisons to similar songs. The book "is about music" inasmuch as it tells stories about musicians, but it is strictly an outsider's perspective. There's no illusion that the writers were part of the culture of the song, or involved themselves with the people in the story. A reader shouldn't expect that in a collection such as this.

My favorite article is the one about 'Midnight Train to Georgia'. That song has so much soul that it surprised me to learn that the original title, given to the tune by its white songwriter, was 'Midnight Plane to Houston'.

The soul singer Cissy Houston… decided to record its first cover version… But the title irked. It wasn't the collision of Houstons – singer and subject – that bothered her, but one of authenticity. If she was going to sing this song, she had to feel it. And, she later said, 'My people are originally from Georgia and they didn't take planes to Houston or anywhere else. They took trains.'

Ultimately, Life of a Song is a great book to read on the way to and from work, or to sit in your book bin next to your favorite chair. It's a book that can be read in lots of small chunks, and each chunk reveals a little bit more about a song than the recording.

Now if you don't mind, I need to catch a plane to Houston.