Tagged "coding"

Sonifying Processing

Sonifying Processing shows students and artists how to bring sound into their Processing programs. It takes a hands-on approach, incorporating examples into every topic that is discussed. Each section of the book explains a sound programming concept, then demonstrates it in code. The examples build from simple synthesizers in the first few chapters to more complex sound-manglers as the book progresses. Each step of the way is examined at a level that is simple enough for new learners, and comfortable for more experienced programmers.

Topics covered include Additive Synthesis, Frequency Modulation, Sampling, Granular Synthesis, Filters, Compression, Input/Output, MIDI, Analysis and everything else an artist may need to bring their Processing sketches to life.

"Sonifying Processing is a great introduction to sound art in Processing. It's a valuable reference for multimedia artists." - Beads Creator Oliver Bown

Sonifying Processing is available as a free pdf ebook, in a Kindle edition, or in print from Amazon.com.

Downloads

Sonifying Processing: The Beads Tutorial

The Beads Library was created by Oliver Bown, and can be downloaded at http://www.beadsproject.net/.

Press

Sonifying Processing on Peter Kirn's wonderful Create Digital Music

Swarm Intelligence in Music in The Signal Culture Cookbook

The Signal Culture Group just published The Signal Culture Cookbook. I contributed a chapter titled "The Mapping Problem", which deals with issues surrounding swarm intelligence in music and the arts. Swarm intelligence is a naturally non-human mode of intelligent behavior, so it presents some unique problems when being applied to the uniquely human behavior of creating art.

The Signal Culture Cookbook is a collection of techniques and creative practices employed by artists working in the field of media arts. Articles include real-time glitch video processing, direct laser animation on film, transforming your drawing into a fake computer, wi-fi mapping, alternative uses for piezo mics, visualizing earthquakes in real time and using swarm algorithms to compose new musical structures. There's even a great, humorous article on how to use offline technology for enhancing your online sentience – and more!

And here's a quote from the introduction to my chapter.

Some composers have explored the music that arises from mathematical functions, such as fractals. Composers such as myself have tried to use computers not just to imitate the human creative process, but also to simulate the possibility of inhuman creativity. This has involved employing models of intelligence and computation that aren't based on cognition, such as cellular automata, genetic algorithms and the topic of this article, swarm intelligence. The most difficult problem with using any of these systems in music is that they aren't inherently musical. In general, they are inherently unrelated to music. To write music using data from an arbitrary process, the composer must find a way of translating the non-musical data into musical data. The problem of mapping a process from one domain to work in an entirely unrelated domain is called the mapping problem. In this article, the problem is mapping from a virtual swarm to music, however, the problem applies in similar ways to algorithmic art in general. Some algorithms may be easily translated into one type of art or music, while other algorithms may require complex math for even basic art to emerge.
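To make the mapping problem concrete, here is a minimal sketch in Java that translates one agent's position in a virtual swarm into a MIDI note. The `SwarmMapping` class, the unit-square coordinates, and the two-octave range starting at middle C are all assumptions made for illustration. None of them come from the chapter.

```java
// Hypothetical sketch: map a swarm agent's position to musical data.
// The coordinate ranges and the MIDI ranges below are illustrative assumptions.
final class SwarmMapping {
    static final int LOWEST_NOTE = 60; // MIDI middle C (assumed lower bound)
    static final int NOTE_RANGE = 24;  // two octaves (assumed)

    // Map a horizontal position in [0, 1] to a MIDI note number.
    static int positionToPitch(double x) {
        double clamped = Math.max(0.0, Math.min(1.0, x));
        return LOWEST_NOTE + (int) Math.round(clamped * NOTE_RANGE);
    }

    // Map a vertical position in [0, 1] to a MIDI velocity in [1, 127].
    static int positionToVelocity(double y) {
        double clamped = Math.max(0.0, Math.min(1.0, y));
        return 1 + (int) Math.round(clamped * 126);
    }

    public static void main(String[] args) {
        System.out.println(positionToPitch(0.5));    // 72
        System.out.println(positionToVelocity(1.0)); // 127
    }
}
```

Even a mapping this small forces musical decisions: which range to use, and what an agent's height should mean. Those decisions are the heart of the mapping problem.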

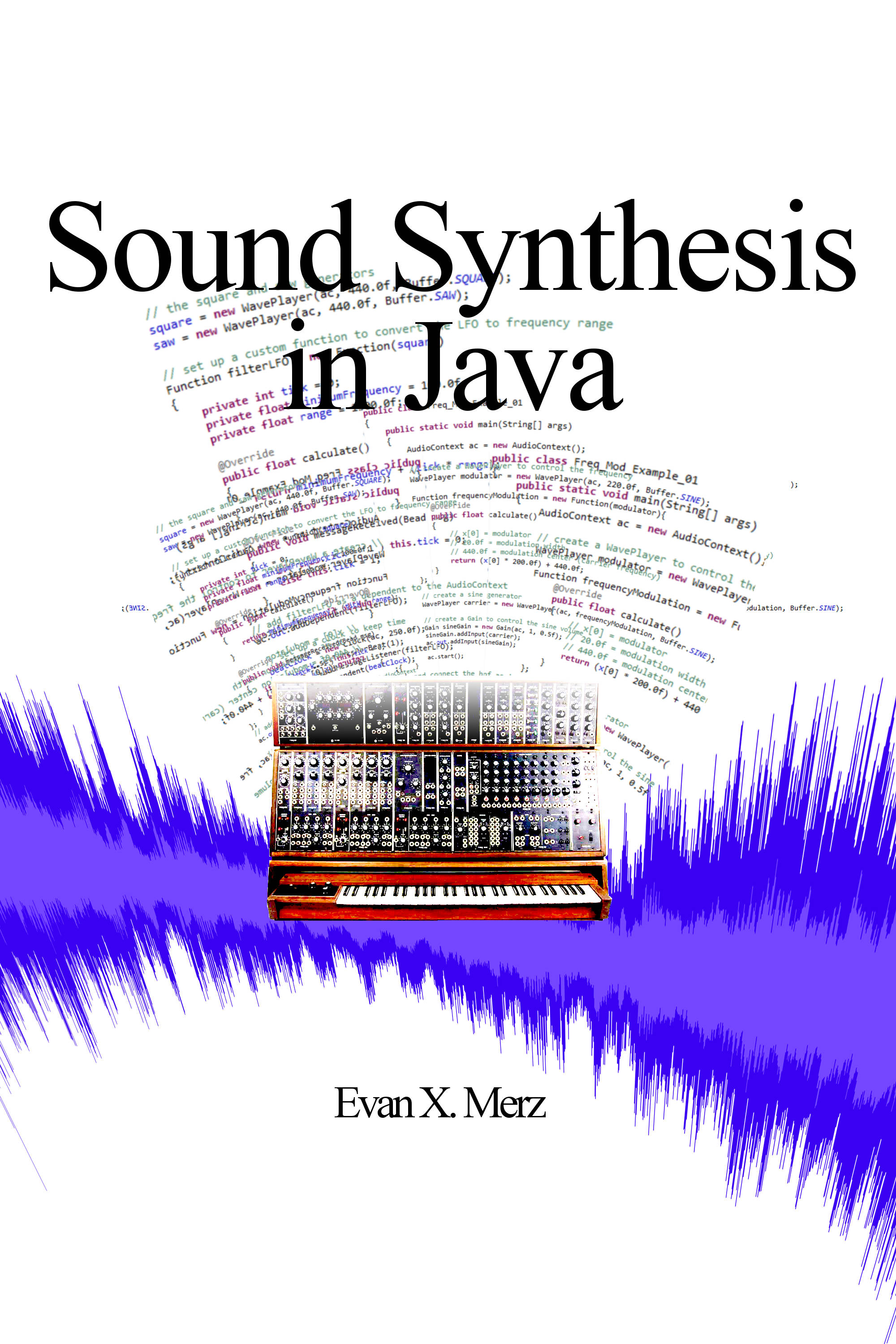

Sound Synthesis in Java

Sound Synthesis in Java introduces sound synthesis concepts using the most widely taught programming language in the world, Java. Using the Beads library, it walks readers through the basics of sound generating programs all the way up through imitations of commercial synthesizers. In eleven chapters the book covers additive synthesis, modulation synthesis, subtractive synthesis, granular synthesis, MIDI keyboard input, rendering to audio files and more. Each chapter includes an explanation of the topic and examples that are as simple as possible so even beginning programmers can follow along. Part two of the book includes six projects that show the reader how to build arpeggiators, imitate an analog synthesizer, and create flowing soundscapes using granular synthesis.

Sound Synthesis in Java is available for free online.

Read Online

Read Sound Synthesis in Java online. The source code is available from links in the text.

Why are Side Effects Bad?

Side effects are any observable change to the state of an object. Some people might qualify this by saying that side effects are implicit or unintended changes to state.

Mutator methods, or setters, which are designed to change the state of an object, should have side effects. Accessor methods, or getters, should not have side effects. Other methods should generally try to avoid changing state beyond what is necessary for the intended task.
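Here is a small Java sketch of that distinction. The `Counter` class is a made-up example: it has a mutator, a clean accessor, and an accessor that quietly changes state every time it is called.

```java
// Hypothetical example: the same value read with and without a side effect.
final class Counter {
    private int count = 0;
    private int reads = 0;

    // Mutator: changing state is the whole point, so the side effect is expected.
    void increment() {
        count++;
    }

    // Accessor without side effects: calling it any number of times changes nothing.
    int getCount() {
        return count;
    }

    // Accessor with a hidden side effect: every read also changes the object.
    int getCountAndTrack() {
        reads++;
        return count;
    }

    int getReads() {
        return reads;
    }

    public static void main(String[] args) {
        Counter c = new Counter();
        c.increment();
        c.getCount();
        c.getCount();
        System.out.println(c.getReads()); // 0
        c.getCountAndTrack();
        System.out.println(c.getReads()); // 1
    }
}
```

A caller of `getCountAndTrack()` has no way to know from the name alone that reading the value also modifies the object.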

Why are these guidelines best practices though? What makes side effects so bad? Students have asked me this question many times, and I've worked with many experienced programmers who don't seem to understand why minimizing side effects is a good goal.

For example, I recently commented out a block of my peer's code because it had detrimental side effects. The code was intended to add color highlighting to log entries to make his debugging easier. The problem with the code was that the syntax highlighting XML was leaking into our save files. He was applying the highlight to a key, then using that key both in the log and in the save file. Worse still, he wrote this code so that it only ran on some platforms. When I was debugging a cross-platform feature, I got very unpredictable behavior and ultimately traced it back to this block.

This is an example where code was intended for one purpose, but it also did something else. That something else is the side effect, and you can see how it caused problems for other developers. Since his change was undocumented and uncommented, I spent hours tracking it down. As a team, we lost significant productivity due to a side effect.
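The shape of that bug can be sketched in a few lines of Java. This is a hypothetical reconstruction, not the real code: the `highlight` helper, the markup it emits, and the map standing in for a save file are all assumptions.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical reconstruction of the bug described above; none of these names
// come from the real code base.
final class SaveLogExample {
    // Wraps text in made-up highlighting markup for the log viewer.
    static String highlight(String text) {
        return "<hl color=\"red\">" + text + "</hl>";
    }

    // Side effect: the key is highlighted once and then reused for the save file.
    static Map<String, String> saveWithSideEffect(String key, String value, StringBuilder log) {
        key = highlight(key);
        log.append(key).append('\n');
        Map<String, String> saveFile = new LinkedHashMap<>();
        saveFile.put(key, value); // the markup leaks into the saved data
        return saveFile;
    }

    // No side effect: the highlighted text exists only for the log.
    static Map<String, String> saveCleanly(String key, String value, StringBuilder log) {
        log.append(highlight(key)).append('\n');
        Map<String, String> saveFile = new LinkedHashMap<>();
        saveFile.put(key, value);
        return saveFile;
    }

    public static void main(String[] args) {
        StringBuilder log = new StringBuilder();
        System.out.println(saveWithSideEffect("volume", "11", log).keySet());
        System.out.println(saveCleanly("volume", "11", log).keySet());
    }
}
```

In the second version the highlighted string never escapes the logging call, so the save file cannot be affected by it.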

Side effects are bad because they make a code base less agile. Side effects cause bugs that are difficult to find, and lead to code that is more difficult to maintain.

Before I continue, let me be clear that all code style guidelines should be broken sometimes. For each situation, a guideline or design pattern may be better or worse, and I recognize that we are always working in shades of gray.

Generally, a block of code should be written for one purpose. If it is a method, then it should do one thing. If another thing needs to be done with an object, then that should be encapsulated in another method.

Here's a hypothetical example that I've seen played out hundreds of times in my career.

A class needs to do task X. A programmer may write a method to do task X, but he accidentally includes logic that also does task Y. Later, he may see that he needs to do task Y all alone. So he writes a method to do task Y. That's where the problem is compounded.

Later still, the definition of task Y changes. So another programmer has to rewrite task Y. He goes to the class, changes the method for task Y alone, does a few quick tests, and proceeds on his merry way.

Then mysterious bugs start occurring. QA can't really track them down to one thing because they only occur sporadically after task Y. Finally, it takes many man-hours to remove task Y from the method for task X.

In this example, the side effect led to code duplication, which led to trouble when updating the code, which led to bugs that cost many hours to track down. The fewer side effects you introduce, the easier your code will be to maintain.

These two examples show how side effects can derail development and why they are so inimical. We all write code with side effects occasionally, but it's our job to figure out how to do it in a way that doesn't make the code more difficult to maintain.

How Can Comments be Controversial?

Comments add information to your code. They don't impact the execution of that code. So how can they be bad? I believe that more commenting is better, and that comments are a vital tool in maintaining an agile codebase, but I've met many experienced developers who argue with me about comments.

The Pragmatic Programmer is an excellent book. It outlines best practices that have solidified over the last thirty years or so as software development has grown as a field. The authors share these practices as a series of tips that are justified by real world anecdotes. In a section on comments, the authors say:

"The DRY principle tells us to keep the low-level knowledge in the code, where it belongs, and reserve the comments for other, high-level explanations. Otherwise, we're duplicating knowledge, and every change means changing both the code and the comments. The comments will inevitably become out of date, and untrustworthy comments are worse than no comments at all"

They make the point that obsolete comments are worse than no comments at all. This is undoubtedly true, but it has been bastardized by many programmers as an excuse for not commenting. I've heard this excuse, along with two others, used to justify a lack of comments in code.

- The code is self-commenting.

- Out of date comments are worse than no comments.

- You shouldn't rely on comments.

In this post I want to address each of these points. I think that thorough commenting speeds up the rate of consumption of code, and that obsolete comments are a failure in maintenance, rather than in comments in general.

1. The code is self-commenting

This is the excuse I hear most often from developers who don't comment their code. The thinking is that clear variable and method names replace the need for comments. There are situations where this is true. For very short methods with a single purpose, I see that no additional comments may be necessary.

But most methods are not very short, and most methods achieve several things that relate to one overall purpose. So in the first place, very few methods fit the bill.

This is not a valid excuse because good comments only make the code easier to read. While the code may be legible on its own, a comment that explains the purpose of a method, or the reason for a string operation, can only make it easier to read. The author of Clean Code makes a related point about how much of our time is spent reading code.

"the ratio of time spent reading vs. writing is well over 10:1. We are constantly reading old code as part of the effort to write new code. Because this ratio is so high, we want the reading of code to be easy, even if it makes the writing harder."

Because we spend more time reading code than writing it, a responsible programmer knows that the extra comment in a method which takes very little time to write may well save another programmer many hours of learning.

2. Out of date comments are worse than no comments

To refute this argument, let's take cars as an analogy. If you buy a car, then drive it for twenty thousand miles without an oil change, it will break down. If you did that, the fault wouldn't be in cars in general. Rather, the fault would be in the lack of maintenance.

Similarly, obsolete comments are not a failure of comments in general, but a failure of the programmer who updated the code without updating the comments. That other programmers have bad practices does not justify continuing that bad practice.

Obsolete comments are a broken window. If you see them, it is your job to fix them.

So while obsolete comments ARE worse than no comments, that argument is an argument against bad coding practices, not comments in general.

3. You shouldn't rely on comments

The argument that a programmer shouldn't rely on comments is a subtle dig at another programmer's skill. They're saying "a good programmer can understand this code with no comments."

There is a grain of a valid point there. If you consider yourself a seasoned programmer, then you should be accustomed to wading through large volumes of old code and parsing out the real meaning and functionality.

But even for veteran programmers, comments make it easier to read and understand the code. It's much easier to interpret a one line summary of a block of code than to parse that block line by line. It's much easier to store that one line in your head than it is to store the whole block in your head.
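As an illustration, here is a short Java method where each block starts with a one-line summary. The `ScoreReport` class is invented for this example. A reader who only skims the three comments still knows what the method does.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical example: each block starts with a one-line summary comment.
final class ScoreReport {
    static double averageOfPassingScores(List<Integer> scores, int passMark) {
        // Keep only the scores at or above the pass mark.
        List<Integer> passing = new ArrayList<>();
        for (int score : scores) {
            if (score >= passMark) {
                passing.add(score);
            }
        }

        // With no passing scores there is nothing to average, so report zero.
        if (passing.isEmpty()) {
            return 0.0;
        }

        // Average the remaining scores.
        double total = 0.0;
        for (int score : passing) {
            total += score;
        }
        return total / passing.size();
    }

    public static void main(String[] args) {
        System.out.println(averageOfPassingScores(Arrays.asList(40, 60, 80), 50)); // 70.0
    }
}
```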

The real reason why this is the worst of excuses is that not all programmers working on your code are senior programmers. Some are green graduates straight out of college. Yes, a more experienced programmer could understand the code without comments, but it will cost that graduate hours to figure out what they could have gotten in minutes with good comments.

My Commenting Principle

Comment your code so that a green programmer can understand it.

Or…

Comment your code so that your boss can understand it.

What does it take to make your code readable by a green graduate? Imagine them going through it line by line. If there are no comments, then they need to understand every function call, and every library routine. They need to understand a bit about your architecture and data model.

Now imagine that every single line is commented.

In that scenario, a green programmer can read your file by reading one comment after the next. If they need more information, they can still drill into the code and get the details, but they can understand the overview just through comments.

The closer you can get to that, the better.

Don't listen to the arguments against thorough commenting habits. Just point the detractors here.

When Code Duplication is not Code Duplication

Duplicating code is a bad thing. Any engineer worth his salt knows that the more you repeat yourself, the more difficult it will be to maintain your code. We've enshrined this in a well-known principle called the DRY principle, where DRY is an acronym standing for Don't Repeat Yourself. So code duplication should be avoided at all costs. Right?

At work I recently came across an interesting case of code duplication that merits more thought, and shows how there is some subtlety needed in application of every coding guideline, even the bedrock ones.

Consider the following CSS, which is a simplified version of a common scenario.

.title {

color: #111111;

}

.text-color-gray-1 {

color: #111111;

}

This looks like code duplication, right? If both classes are applying the same color, then they do the same thing. If they do the same thing, then they should BE the same thing, right?

But CSS, and markup in general, presents an interesting case. Are these rules really doing the same thing? Are they both responsible for making the text gray? No.

The function of these two rules is different, even though the effect is the same. The first rule styles titles on the website, while the second rule styles any arbitrary div. The first rule is a generalized style, while the second rule is a special case override. The two rules do fundamentally different things.

Imagine a case where we optimized those two classes by removing the title class and just using the latter class. Then the designer changes the title color to dark blue. To change the title color, the developer now has to replace each occurrence of .text-color-gray-1 where it styles a title. So, by optimizing two things with different purposes, the developer has actually made more work.

It's important to recognize in this case that code duplication is not always code duplication. Just because these two CSS classes are applying the same color doesn't mean that they are doing the same thing. In this case, the CSS classes are more like variables than methods. They hold the same value, but that is just a coincidence.
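The same idea can be shown outside CSS. The following Java sketch is only an analogy, with invented names: two constants hold the same value today, but each one answers a different question.

```java
// Hypothetical Java analogy for the two CSS rules above.
final class Theme {
    // Same value today, but each constant answers a different question.
    static final String TITLE_COLOR = "#111111";       // what color are titles?
    static final String TEXT_COLOR_GRAY_1 = "#111111"; // what is gray-1?

    static String titleStyle() {
        return "color: " + TITLE_COLOR + ";";
    }

    static String grayOverrideStyle() {
        return "color: " + TEXT_COLOR_GRAY_1 + ";";
    }

    public static void main(String[] args) {
        System.out.println(titleStyle());
        System.out.println(grayOverrideStyle());
    }
}
```

If the designer later asks for dark blue titles, only `TITLE_COLOR` changes. Merging the two constants would have saved one line and cost a search through every place the shared constant is used.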

What looks like code duplication is not actually code duplication.

But… what is the correct thing?

There is no right answer here. It's a complex problem. You could solve it in lots of different ways, and there are probably three or four different approaches that are equally valid, in the sense that they result in the same amount of maintenance.

The important thing is not to insist that there is one right way to solve this problem, but to recognize that blithely applying the DRY principle here may not be the path to less maintenance.

Single File Components are Good

I was reading the Vue.js documentation recently, and I came across a very compelling little passage. The passage was explaining the thinking behind single file components. In a single file component, the template, JavaScript logic, and perhaps even styles, are all collocated in the same file.

Putting all the parts of a component into a single file may seem odd to some programmers. After all, shouldn't we be separating logic from templates? Shouldn't we be separating styles from logic too?

And the answer is that the right thing to do is situation dependent, and this is something that a lot of modern programmers don't understand. A lot of programmers seem to think that there are rules, and you must follow the rules. They don't realize that rules should only be followed when they are helping you.

So the core question is this: is separating a single view into several files really helping your development process?

Here's what the Vue documentation says.

One important thing to note is that separation of concerns is not equal to separation of file types. In modern UI development, we have found that instead of dividing the codebase into three huge layers that interweave with one another, it makes much more sense to divide them into loosely-coupled components and compose them. Inside a component, its template, logic and styles are inherently coupled, and collocating them actually makes the component more cohesive and maintainable.

So the argument from the Vue developers is that keeping all the code related to one view inside one file is actually more maintainable than separating them.

I tentatively agree with this.

Obviously, it's good to separate certain things out into their own files. Foundational CSS styles and core JS helper methods should have their own place. But for all the code that makes a component unique, I think it's easiest for developers if that is in one file.

Here are the problems I face with breaking a view into multiple files.

1. What is each file called? Where is it located?

When you separate a view into multiple files, it becomes difficult to find each file. It's fine to have a standard for naming and locating files, but in any large software project, there are going to be situations where the standard can't be followed for one reason or another. Maybe the view was created early in development. Maybe the view is shared. Or maybe the view was created by a trainee developer and the mis-naming was missed in the code review. Whatever the cause, file names and locations can be stumbling blocks.

At my workplace, we have hundreds of templates named item.js.hamlbars. That's not very helpful, but what's more troublesome is when, for whatever reason, a list item couldn't be named item.js.hamlbars. In that case, I'm left searching for files with whatever code words I can guess.

2. Where is the bug?

When you separate a view into multiple files, you don't know where a bug may be coming from. This is especially troublesome if you are in a situation where conditional logic is being put into the templates. Once you have conditional logic in the templates, then you can't know if the bug is in the JavaScript or in the template itself. Furthermore, it then takes developer time to figure out where to put the fix.

Single File Components are a Bonus

Putting all the code for a single view into a single file is not only logical, but it also doesn't violate the separation-of-concerns ethos. While the pieces are all in the same file, they each have a distinct section. So this isn't the bad old days of web development, where JavaScript was strewn across a page in any old place. Rather, collocating all the code for a single view is a way to take away some common development headaches.

Redux in one sentence

Redux is a very simple tool that is very poorly explained. In this article, I will explain Redux in a single sentence that is sorely missing from the Redux documentation.

Redux is a pattern for modifying state using events.

That's it. It's a dedicated event bus for managing application state. Read on for a more detailed explanation, and for a specific explanation of the vocabulary used in Redux.

1. Redux is a design pattern.

The first thing you need to know is that Redux is not really software per se. True, you can download a Redux "library", but this is just a library of convenience functions and definitions. You could implement a Redux architecture without using Redux software.

In this sense, Redux is not like jQuery, or React, or most of the libraries you get from npm or yarn. When you learn Redux, you aren't learning one specific thing, but really just a group of concepts. In computer science, we call concepts like this a design pattern. A design pattern is a group of concepts that simplify an archetypal task (a task that re-occurs in software development). Redux is a design pattern for managing data in a JavaScript application.

2. Redux is an extension of the Observer Pattern.

I think this is the only bullet point needed to explain Redux to experienced developers.

The observer pattern is the abstract definition of what programmers refer to as events and event listeners. The observer pattern defines a situation where one object is listening to events triggered by another object.

In JavaScript, we commonly use the observer pattern when we wire up onClick events. When you set up an onClick event, you are saying that an object should listen to an HTML element, and respond when it is clicked. In this scenario, we are usually managing the visual state of the page using events. For example, a switch is clicked and it must respond by changing color.

Redux is a dedicated event bus for managing internal application state using events.

So Redux is a way of triggering pre-defined events to modify state in pre-determined ways. This allows us to ensure that state isn't being modified in ad hoc ways and it allows us to examine the history of application state by looking at the sequence of events that led to that state.

3. Actions are event definitions. Reducers are state modifiers.

The new vocabulary used by Redux makes it seem like it's something new, when it's really just a slight twist on existing technology and patterns.

Action = Event definition. Like events in any language, events in Redux have a name and a payload. Events are not active like classes or methods. They are (usually) passive structures that can be contained or transmitted in JSON.

Reducer = Event listener. A reducer changes application state in response to an action. It's called a Reducer because it literally works like something you would pass to the reduce function.

Dispatch = Trigger event. The dispatch method can be confusing at first, but it is just a way of triggering the event/action. You should think of it like the methods used to trigger exceptions in most languages. After all, exceptions are just another variation on the Observer pattern. So dispatch is like throw in Java or raise in Ruby.
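Since Redux is a pattern rather than a particular piece of software, the three terms above can be sketched in any language. Here is a minimal version in plain Java. It is not the Redux library's API: the `Action` and `Store` classes and the "TOGGLE" action name are assumptions made for this sketch.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiFunction;

// A minimal sketch of the pattern in plain Java. This is not the Redux library's
// API; Action, Store and the "TOGGLE" action name are illustrative assumptions.
final class Action {
    final String type;    // the event name
    final Object payload; // the event data (may be null)

    Action(String type, Object payload) {
        this.type = type;
        this.payload = payload;
    }
}

final class Store<S> {
    private S state;
    private final BiFunction<S, Action, S> reducer;
    private final List<Runnable> listeners = new ArrayList<>();

    Store(S initialState, BiFunction<S, Action, S> reducer) {
        this.state = initialState;
        this.reducer = reducer;
    }

    S getState() {
        return state;
    }

    // Observers are notified after every dispatched action.
    void subscribe(Runnable listener) {
        listeners.add(listener);
    }

    // Dispatch = trigger the event: the reducer computes the next state.
    void dispatch(Action action) {
        state = reducer.apply(state, action);
        for (Runnable listener : listeners) {
            listener.run();
        }
    }
}

final class ReduxSketch {
    // Reducer: (current state, action) -> next state, with no other effects.
    static Boolean switchReducer(Boolean on, Action action) {
        if ("TOGGLE".equals(action.type)) {
            return !on;
        }
        return on; // unknown actions leave the state unchanged
    }

    public static void main(String[] args) {
        Store<Boolean> store = new Store<>(false, ReduxSketch::switchReducer);
        store.subscribe(() -> System.out.println("switch on? " + store.getState()));
        store.dispatch(new Action("TOGGLE", null)); // prints "switch on? true"
    }
}
```

The only way to change the state held by `Store` is to dispatch an action, which is what rules out ad hoc modifications.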

4. Redux manages local state separately from the backend.

Redux is a pattern for managing only the internal state of the application. It does not dictate anything about how you talk to your API, nor does it dictate the way that your internal state should be connected to the backend state.

It's a common mistake to put the backend code directly into the Redux action or reducer. Although this can seem sensible at times, tightly coupling the local application state with backend interactions is dangerous and often undesirable. For instance, a user may toggle a switch three or four times just to see what it does. Usually you want to update local state immediately when they hit the switch, but you only want to update the backend after they have stopped.
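One way to keep the two concerns apart is sketched below in plain Java. The `BackendClient` interface and the `flush()` method are assumed names. In a real application, something like a debounce timer would call `flush()` once the user has paused.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: local state changes immediately, while the backend only
// receives the final value. BackendClient and flush() are assumed names.
interface BackendClient {
    void saveSwitch(boolean on);
}

final class SwitchSync {
    private boolean localState = false;
    private boolean dirty = false;
    private final BackendClient backend;

    SwitchSync(BackendClient backend) {
        this.backend = backend;
    }

    // Called on every click: update local state right away, defer the backend.
    void toggle() {
        localState = !localState;
        dirty = true;
    }

    boolean isOn() {
        return localState;
    }

    // Called after the user has stopped toggling: send only the latest value.
    void flush() {
        if (dirty) {
            backend.saveSwitch(localState);
            dirty = false;
        }
    }

    public static void main(String[] args) {
        List<Boolean> sent = new ArrayList<>();
        SwitchSync sync = new SwitchSync(on -> sent.add(on));
        sync.toggle();
        sync.toggle();
        sync.toggle();
        sync.flush();
        System.out.println(sent); // [true]
    }
}
```

Three clicks produce three local updates but a single backend call.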

Conclusion

Redux is not complex. It is a dedicated event bus for managing local application state. Actions are events. Reducers modify state. That's it. I hope that helps.

Here are a few other useful links:

- https://alligator.io/redux/redux-intro/

- https://www.tutorialspoint.com/redux/redux_core_concepts.htm

- https://redux.js.org/introduction/core-concepts/

Glitching Images in Processing

This summer I'm going to release a new album of solo electronic music that is heavily influenced by EDM and classic rock. For the past few weeks I've been trying to figure out what to do about the art on the new album.

The album is called "FYNIX Fights Back" so I decided to use images of animals fighting, but I didn't just want to pull up generic images. I wanted to add my own special touch to each image. So I pulled out a tool that I haven't used in years: the Processing programming language.

Processing is great for simple algorithmic art. In this case I wanted to glitch some images interactively, but I wasn't exactly sure what I wanted to do.

So I just started experimenting. I played with colors and shapes and randomness. I like to derive randomness based on mouse movement. The more the mouse moves, the more randomness is imparted to whatever the user is doing.

I added image glitching based on mouse speed. The quicker the cursor moves, the more random are the generated shapes, and the more they are offset from their source position in the original image.
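The arithmetic behind that is small enough to show on its own. The following plain-Java sketch mirrors the constants and the vertex formula from the source code further down. The 500 pixel canvas width is an assumption, and `regionSize` is a simplified stand-in for the sketch's `velocity()` function.

```java
// Plain-Java sketch of the arithmetic in the source below. The canvas width of
// 500 pixels is an assumption; the constants mirror the sketch's defaults.
final class GlitchMath {
    static final int MINIMUM_VELOCITY = 40;
    static final int VELOCITY_FACTOR_DIVISOR = 5;

    // Size of the glitched region along one axis, given the pixels moved since
    // the last frame (a simplification of the sketch's velocity() function).
    static int regionSize(int pixelsMoved) {
        return MINIMUM_VELOCITY + 3 * Math.abs(pixelsMoved);
    }

    // Number of random vertices in the generated shape.
    static int vertexCount(int sizeX, int sizeY, int canvasWidth) {
        int velocityFactor = canvasWidth / VELOCITY_FACTOR_DIVISOR;
        return ((3 * velocityFactor) + sizeX + sizeY) / velocityFactor;
    }

    public static void main(String[] args) {
        // Mouse held still: a 40 x 40 region and a triangle.
        System.out.println(vertexCount(regionSize(0), regionSize(0), 500));  // 3
        // Mouse moved 60 pixels horizontally: a 220 x 40 region and 5 vertices.
        System.out.println(vertexCount(regionSize(60), regionSize(0), 500)); // 5
    }
}
```

So a still cursor paints small triangles, and faster movement produces larger regions with more vertices.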

Here's the end result.

Here's the source code. Make it even better.

import java.awt.event.KeyEvent;

// relationship between image size and canvas size

float scalingFactor = 2.0;

// image from unsplash.com

String imageFilename = "YOUR_FILE_IN_DATA_FOLDER.jpg";

// image container

PImage img;

PImage scaledImage;

int minimumVelocity = 40;

int velocityFactorDivisor = 5; // the larger this is, the more vertices you will get

int velocityFactor = 1; // this will be overridden below

float minimumImageSize = 10.0f;

boolean firstDraw = true;

int currentImage = 1;

void settings() {

// load the source image

img = loadImage(imageFilename);

// load the pixel colors into the pixels array

img.loadPixels();

// create a canvas that is proportional to the selected image

size((int)(img.width / scalingFactor), (int)(img.height / scalingFactor));

// scale the image for the window size

scaledImage = loadImage(imageFilename);

scaledImage.resize(width, height);

// override velocityFactor

velocityFactor = (int)(width / velocityFactorDivisor);

}

void setup() {

// disable lines

noStroke();

}

void keyPressed() {

if(keyCode == KeyEvent.VK_R) {

firstDraw = true; // if the user presses R, then reset

}

}

void draw() {

if(firstDraw) {

image(scaledImage, 0, 0);

firstDraw = false;

}

// right click to render to image file

if(mousePressed && mouseButton == RIGHT) {

save(imageFilename.replace(".jpg", "") + "_render_" + currentImage + ".tga");

currentImage++;

}

if(mousePressed && mouseButton == LEFT && mouseX >= 0 && mouseX < width && mouseY >= 0 && mouseY < height) {

int velocityX = minimumVelocity + (3 * velocity(mouseX, pmouseX, width));

int velocityY = minimumVelocity + (3 * velocity(mouseY, pmouseY, height));

color c = img.pixels[mousePositionToPixelCoordinate(mouseX, mouseY)];

int vertexCount = ((3 * velocityFactor) + velocityX + velocityY) / velocityFactor;

int minimumX = mouseX - (velocityX / 2);

int maximumX = mouseX + (velocityX / 2);

int minimumY = mouseY - (velocityY / 2);

int maximumY = mouseY + (velocityY / 2);

PGraphics pg = createGraphics(maximumX - minimumX, maximumY - minimumY);

pg.beginDraw();

pg.noStroke();

pg.fill(c);

// first draw a shape into the buffer

pg.beginShape();

for(int i = 0; i < vertexCount; i++) {

pg.vertex(random(0, pg.width), random(0, pg.height));

}

pg.endShape();

pg.endDraw();

pg.loadPixels();

// then copy image pixels into the shape

// get the upper left coordinate in the source image

int startingCoordinateInSourceImage = mousePositionToPixelCoordinate(minimumX, minimumY);

// get the width of the source image

int sourceImageWidth = (int)(img.width);

// set the offset from the source image

int offsetX = velocity(mouseX, pmouseX, width);

int offsetY = velocity(mouseY, pmouseY, height);

// ensure that the offset doesn't go off the canvas

if(mouseX > width / 2) offsetX *= -1;

if(mouseY > height / 2) offsetY *= -1;

for(int y = 0; y < pg.height; y++) {

for(int x = 0; x < pg.width; x++) {

// calculate the coordinate in the destination image

int newImageY = y * pg.width;

int newImageX = x;

int newImageCoordinate = newImageX + newImageY;

// calculate the location in the source image

//int sourceImageCoordinate = (int)(startingCoordinateInSourceImage + (x * scalingFactor) + ((y * scalingFactor) * sourceImageWidth));

//int sourceImageCoordinate = (int)(startingCoordinateInSourceImage + ((x + offsetX) * scalingFactor) + (((y + offsetY) * scalingFactor) * sourceImageWidth));

int sourceImageX = (int)(((x + offsetX) * scalingFactor));

int sourceImageY = (int)((((y + offsetY) * scalingFactor) * sourceImageWidth));

int sourceImageCoordinate = (int)(startingCoordinateInSourceImage + sourceImageX + sourceImageY);

// ensure the calculated coordinates are within bounds

if(newImageCoordinate >= 0 && newImageCoordinate < pg.pixels.length

&& sourceImageCoordinate >= 0 && sourceImageCoordinate < img.pixels.length

&& pg.pixels[newImageCoordinate] == c) {

pg.pixels[newImageCoordinate] = img.pixels[sourceImageCoordinate];

}

}

}

image(pg, minimumX, minimumY);

}

}

// convert the mouse position to a coordinate within the source image

int mousePositionToPixelCoordinate(int mouseX, int mouseY) {

return (int)(mouseX * scalingFactor) + (int)((mouseY * scalingFactor) * img.width);

}

// This sort of calculates mouse velocity as relative to canvas size

int velocity(float x1, float x2, float size) {

int val = (int)(Math.abs((x1 - x2) / size) * size);

return val;

}
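Most of the index math in the sketch comes from one fact: a Processing pixels array is one-dimensional and stored row by row, so the pixel at (x, y) lives at index x + y * width. Here is that conversion in plain Java, outside of Processing. `PixelIndex` is a hypothetical helper written for this explanation.

```java
// Plain-Java illustration of the index arithmetic used in the sketch above.
// PixelIndex is a hypothetical helper, not part of Processing.
final class PixelIndex {
    // Convert an (x, y) position to an index in a row-major pixel array.
    static int toIndex(int x, int y, int imageWidth) {
        return x + y * imageWidth;
    }

    // Convert a canvas position to a source-image index when the canvas is the
    // image shrunk by scalingFactor, as in mousePositionToPixelCoordinate().
    static int canvasToSourceIndex(int canvasX, int canvasY, float scalingFactor, int imageWidth) {
        int sourceX = (int) (canvasX * scalingFactor);
        int sourceY = (int) (canvasY * scalingFactor);
        return toIndex(sourceX, sourceY, imageWidth);
    }

    public static void main(String[] args) {
        System.out.println(toIndex(3, 2, 10));                    // 23
        System.out.println(canvasToSourceIndex(3, 2, 2.0f, 100)); // 406
    }
}
```

This version truncates the row to a whole number before multiplying by the image width. With the sketch's scaling factor of 2.0 that gives the same result as the original function, and with a fractional scaling factor it keeps the index aligned to the start of a row.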

How to render synchronous audio and video in Processing in 2021

About a decade ago I wrote a blog post about rendering synchronous audio and video in Processing. I posted it on my now-defunct blog, computermusicblog.com. Recently, I searched for the same topic, and found that my old post was one of the top hits, but my old blog was gone.

So in this post I want to give searchers an updated guide for rendering synchronous audio and video in Processing.

It's still a headache to render synchronous audio and video in Processing, but with the technique here you should be able to copy my work and create a simple 2-click process that will get you the results you want in under 100 lines of code.

Prerequisites

You must install Processing, Minim, VideoExport, and ffmpeg on your computer. Processing can be installed from processing.org/. Minim and VideoExport are Processing libraries that you can add via Processing menus (Sketch > Import Library > Add Library). You must add ffmpeg to your path. Google how to do that.

The final, crappy prerequisite for this particular tutorial is that you must be working with a pre-rendered wav file. In other words, this will work for generating Processing visuals that are based on an audio file, but not for Processing sketches that synthesize video and audio at the same time.

Overview

Here's what the overall process looks like.

- Run the Processing sketch. Press q to quit and render the video file.

- Run ffmpeg to combine the source audio file with the rendered video.
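I run the second step from a batch file, but if you would rather stay in Java, the same command can be assembled in code. This is only a sketch: `MuxCommand` is an assumed helper, and the file names are the ones used in the comment at the top of the source below.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper for step two. The file names are the ones used in the
// comment at the top of the sketch; MuxCommand itself is an assumed name.
final class MuxCommand {
    // Build the ffmpeg argument list: copy the video stream, encode the audio
    // as AAC, and stop at the end of the shorter input.
    static List<String> build(String video, String audio, String output) {
        return Arrays.asList(
            "ffmpeg", "-i", video, "-i", audio,
            "-c:v", "copy", "-c:a", "aac", "-shortest", output);
    }

    public static void main(String[] args) {
        List<String> command = build("render.mp4", "data/audio.wav", "output.mp4");
        // Print the command. To execute it instead, with ffmpeg on the path, pass
        // the list to new ProcessBuilder(command).inheritIO().start().
        System.out.println(String.join(" ", command));
    }
}
```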

Source Code

Without further ado, here's the source. This code is a simple audio visualizer that paints the waveform over a background image. Notice the ffmpeg instructions in the long comment at the top.

/*

This is a basic audio visualizer created using Processing.

Press q to quit and render the video.

For more information about Minim, see http://code.compartmental.net/tools/minim/quickstart/

For more information about VideoExport, see https://timrodenbroeker.de/processing-tutorial-video-export/

Use ffmpeg to combine the source audio with the rendered video.

See https://superuser.com/questions/277642/how-to-merge-audio-and-video-file-in-ffmpeg

The command will look something like this:

ffmpeg -i render.mp4 -i data/audio.wav -c:v copy -c:a aac -shortest output.mp4

I prefer to add ffmpeg to my path (google how to do this), then put the above command

into a batch file.

*/

// Minim for playing audio files

import ddf.minim.*;

// VideoExport for rendering videos

import com.hamoid.*;

// Audio related objects

Minim minim;

AudioPlayer song;

String audioFile = "audio.wav"; // The filename for your music. Must be a 16 bit wav file. Use Audacity to convert.

// image related objects

float scaleFactor = 0.25f; // Multiplied by the image size to set the canvas size. Changing this is how you change the resolution of the sketch.

int middleY = 0; // this will be overridden in setup

PImage background; // the background image

String imageFile = "background.jpg"; // The filename for your background image. The file must be present in the data folder for your sketch.

// video related objects

int frameRate = 24; // This framerate MUST be achievable by your computer. Consider lowering the resolution.

VideoExport videoExport;

public void settings() {

background = loadImage(imageFile);

// set the size of the canvas window based on the loaded image

size((int)(background.width * scaleFactor), (int)(background.height * scaleFactor));

}

void setup() {

frameRate(frameRate);

videoExport = new VideoExport(this, "render.mp4");

videoExport.setFrameRate(frameRate);

videoExport.startMovie();

minim = new Minim(this);

// the second param sets the buffer size to the width of the canvas

song = minim.loadFile(audioFile, width);

middleY = height / 2;

if(song != null) {

song.play();

}

fill(255);

stroke(255);

strokeWeight(2);

// tell Processing to draw images semi-transparent

tint(255, 255, 255, 80);

}

void draw() {

image(background, 0, 0, width, height);

for(int i = 0; i < song.bufferSize() - 1; i++) {

line(i, middleY + (song.mix.get(i) * middleY), i+1, middleY + (song.mix.get(i+1) * middleY));

}

videoExport.saveFrame(); // render a video frame

}

void keyPressed() {

if (key == 'q') {

videoExport.endMovie(); // Render a silent mp4 video.

exit();

}

}
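
The comment at the top of the sketch suggests putting the ffmpeg command into a batch file. As an alternative, here is a minimal plain-Java sketch that assembles the same command and launches it with ProcessBuilder. The class name FfmpegMux and the method buildCommand are hypothetical names chosen for illustration, and the file names are the ones assumed in the comment above (render.mp4, data/audio.wav, output.mp4). It assumes ffmpeg is on your PATH and that you run it from the sketch folder.

```java
import java.io.IOException;
import java.util.List;

// Hypothetical helper: not part of Processing, Minim, or VideoExport.
final class FfmpegMux {

    // Builds the same argument list as the ffmpeg command in the sketch's header comment.
    static List<String> buildCommand(String video, String audio, String output) {
        return List.of(
            "ffmpeg",
            "-i", video,      // silent video rendered by the sketch
            "-i", audio,      // source audio file
            "-c:v", "copy",   // copy the video stream without re-encoding
            "-c:a", "aac",    // encode the audio as AAC
            "-shortest",      // stop at the end of the shorter input
            output);
    }

    public static void main(String[] args) throws InterruptedException {
        List<String> cmd = buildCommand("render.mp4", "data/audio.wav", "output.mp4");
        System.out.println(String.join(" ", cmd));
        try {
            // inheritIO() forwards ffmpeg's console output to this program's console.
            Process p = new ProcessBuilder(cmd).inheritIO().start();
            System.out.println("ffmpeg exited with code " + p.waitFor());
        } catch (IOException e) {
            System.out.println("Could not start ffmpeg (is it on your PATH?): " + e.getMessage());
        }
    }
}
```

Passing the arguments as a list rather than one long string avoids quoting problems if your file names contain spaces.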

Composing with All Sound

I sometimes get asked about my dissertation, so I wanted to write a blog post to explain it. In this post, I describe the work I did for my dissertation, which brought together web APIs and a network model of creativity.

Composing with all sound

When I was in graduate school I was somewhat obsessed with the idea of composing with all sound. When I say "composing with all sound" I don't mean composing with a lot of sounds. I mean every sound that exists. Composing with every sound that has ever existed is impossible, of course, but the internet gives us a treasure trove of sound.

So I looked at the largest collections of sound on the internet, and the best that I found in 2011 was the website freesound.org/. Freesound is great for several reasons.

- The library is absolutely massive and constantly growing

- They have a free-to-use API

- Users can upload tags and descriptions of the sounds

- Freesound analyzes the sounds and gives access to those descriptors via the API

A model of creativity

Once I had a source for sounds, all I needed was a way to connect them. Neural network research was blossoming in 2011, so I tried to find a neural model that I could connect to the data on Freesound. That's when I found Melissa Schilling's network model of cognitive insight.

Schilling's theory essentially sees ideas as networks in the brain. Ideas are connected by various relationships. Ideas can look alike, sound alike, share a similar space, be described by similar words, and so on. In Schilling's model, cognitive insight, or creativity, occurs when two formerly disparate networks of ideas are connected using a bridge.

So to compose with all the sounds on Freesound, all I needed to do was to organize sounds into networks, then find new ways to connect them. But how could I organize sounds into networks, and what would a new connection look like?

The Wordnik API

I realized that I could make lexical networks of sounds. The tags on sounds on Freesound give us a way to connect sounds. For instance, we could find all sounds that have the tag "scream" and form them into one network.

To make creative jumps, I had to bring in a new data source. After all, the sounds that share a tag are already connected.

That's when I incorporated the Wordnik API. Wordnik is an incredibly complete dictionary, thesaurus, and encyclopedia all wrapped into one. And best of all, they expose it using a fast and affordable API.

Composing with all sound using Freesound, a network model of creativity, and lexical relationships

So the final algorithm looks something like this, although there are many ways to vary it.

- Start with a search term provided by a user

- Build a network of sounds with that search term

- Use Wordnik to find related words

- Build a network around the related words

- Connect the original network to the new one

- Repeat
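
The steps above can be sketched in plain Java. This is only an illustration under stated assumptions, not the dissertation code: the two maps stand in for Freesound tag searches and Wordnik related-word lookups (no real API is called), the class and method names are hypothetical, and the choice to bridge two networks by linking their first sounds is my simplification.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical sketch of the network-building loop, using in-memory stand-in data.
final class SoundNetworkSketch {
    // Undirected graph: sound id -> ids of connected sounds.
    final Map<String, Set<String>> edges = new TreeMap<>();

    void connect(String a, String b) {
        if (a.equals(b)) return; // no self-loops
        edges.computeIfAbsent(a, k -> new TreeSet<>()).add(b);
        edges.computeIfAbsent(b, k -> new TreeSet<>()).add(a);
    }

    // Link every pair of sounds that share a term, forming one network.
    void addNetwork(List<String> sounds) {
        for (String s : sounds) edges.computeIfAbsent(s, k -> new TreeSet<>());
        for (int i = 0; i < sounds.size(); i++)
            for (int j = i + 1; j < sounds.size(); j++)
                connect(sounds.get(i), sounds.get(j));
    }

    static SoundNetworkSketch build(String searchTerm, int rounds,
                                    Map<String, List<String>> soundsByTag,
                                    Map<String, List<String>> relatedWords) {
        SoundNetworkSketch graph = new SoundNetworkSketch();
        Set<String> visited = new HashSet<>();
        Deque<String> queue = new ArrayDeque<>();
        visited.add(searchTerm);
        queue.add(searchTerm);
        // Build a network of sounds for the user's search term.
        graph.addNetwork(soundsByTag.getOrDefault(searchTerm, List.of()));
        // Repeat: expand one queued term per round.
        for (int r = 0; r < rounds && !queue.isEmpty(); r++) {
            String term = queue.poll();
            List<String> from = soundsByTag.getOrDefault(term, List.of());
            for (String related : relatedWords.getOrDefault(term, List.of())) {
                if (!visited.add(related)) continue; // term already expanded
                List<String> to = soundsByTag.getOrDefault(related, List.of());
                graph.addNetwork(to); // network around the related word
                // Assumed bridge: link the first sound of each network.
                if (!from.isEmpty() && !to.isEmpty()) graph.connect(from.get(0), to.get(0));
                queue.add(related);
            }
        }
        return graph;
    }

    public static void main(String[] args) {
        Map<String, List<String>> sounds = Map.of(
            "scream", List.of("scream_01", "scream_02"),
            "shout", List.of("shout_01", "shout_02"));
        Map<String, List<String>> related = Map.of("scream", List.of("shout"));
        System.out.println(build("scream", 2, sounds, related).edges);
    }
}
```

The bridge edge is the interesting part: it is the only link between two networks that would otherwise stay separate, which is the kind of connection the creativity model calls for.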

To sonify the resulting networks, I used a simple model of artificial intelligence that is sort of like a cellular automaton. I released a swarm of simple automata on the network and turned on sounds whenever the number of bots on a sound reached a critical mass.
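
Here is a rough Java sketch of that idea. It is an assumption-heavy illustration rather than the original implementation: I model each bot as a random walker that hops to a neighbouring sound on every step, and a sound counts as "on" while at least criticalMass bots sit on it. The class name SwarmSketch and all of its members are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.Random;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical sketch: random-walking bots on a sound network.
final class SwarmSketch {
    final Map<String, List<String>> neighbours; // sound id -> connected sound ids
    final String[] positions;                   // current sound of each bot
    final int criticalMass;                     // bots needed to switch a sound on
    final Random random;

    SwarmSketch(Map<String, List<String>> neighbours, int bots, String start,
                int criticalMass, long seed) {
        this.neighbours = neighbours;
        this.positions = new String[bots];
        Arrays.fill(positions, start); // release the whole swarm on one sound
        this.criticalMass = criticalMass;
        this.random = new Random(seed);
    }

    // Move every bot to a randomly chosen neighbour of its current sound.
    void step() {
        for (int i = 0; i < positions.length; i++) {
            List<String> options = neighbours.getOrDefault(positions[i], List.of());
            if (!options.isEmpty()) {
                positions[i] = options.get(random.nextInt(options.size()));
            }
        }
    }

    // Number of bots currently on each occupied sound.
    Map<String, Integer> counts() {
        Map<String, Integer> result = new TreeMap<>();
        for (String p : positions) result.merge(p, 1, Integer::sum);
        return result;
    }

    // Sounds that currently hold at least criticalMass bots.
    Set<String> activeSounds() {
        Set<String> active = new TreeSet<>();
        counts().forEach((sound, n) -> {
            if (n >= criticalMass) active.add(sound);
        });
        return active;
    }

    public static void main(String[] args) {
        Map<String, List<String>> graph = Map.of(
            "scream_01", List.of("scream_02", "shout_01"),
            "scream_02", List.of("scream_01"),
            "shout_01", List.of("scream_01"));
        SwarmSketch swarm = new SwarmSketch(graph, 10, "scream_01", 3, 42L);
        for (int i = 0; i < 5; i++) {
            System.out.println(swarm.counts() + " on: " + swarm.activeSounds());
            swarm.step();
        }
    }
}
```

In a real piece, each change in activeSounds() would start or stop playback of the corresponding sound file. The fixed seed only makes runs repeatable while experimenting.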

Results

Here are the results of my dissertation. You can read a paper I presented at a conference, and the dissertation itself. Then you can listen to the music written by this program. It's not Beethoven, but I guarantee that you will find it interesting.

Composing with All Sound Using the FreeSound and Wordnik APIs

Method for Simulating Creativity to Generate Sound Collages from Documents on the Web

Disconnected: Algorithmic music composed using all sounds and a network model of creativity